EXOPLANET OBSERVING FOR AMATEURS

Bruce L. Gary

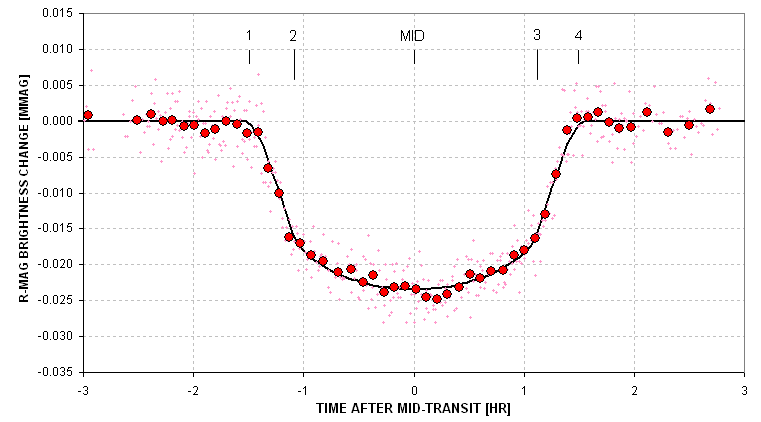

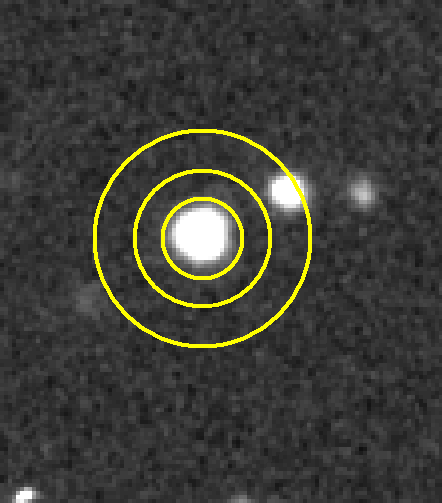

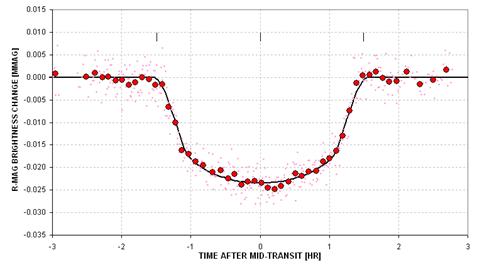

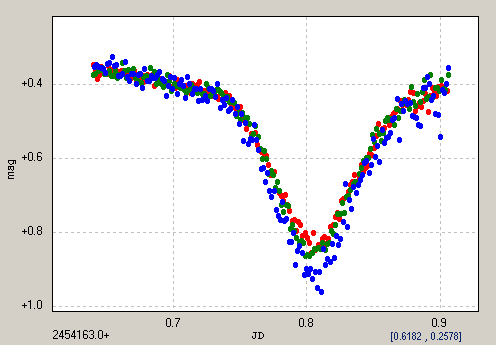

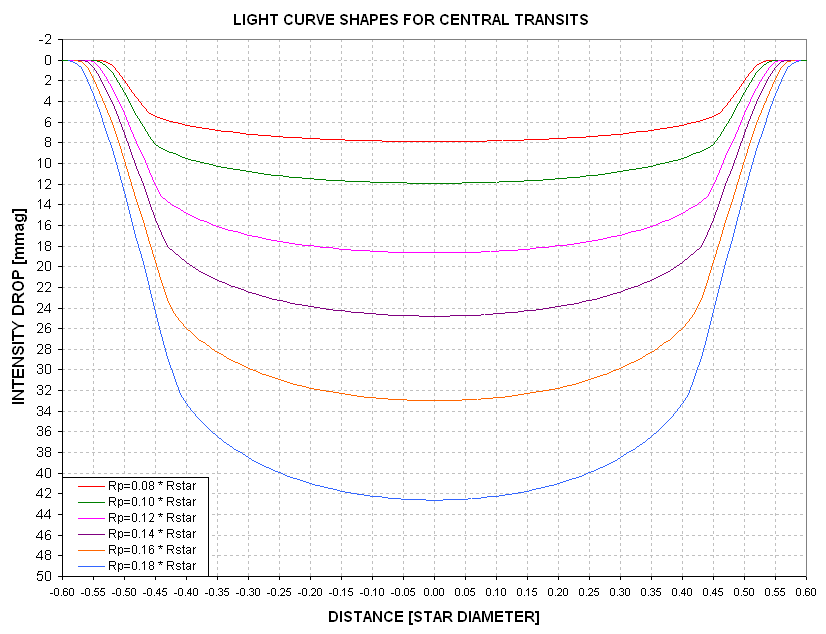

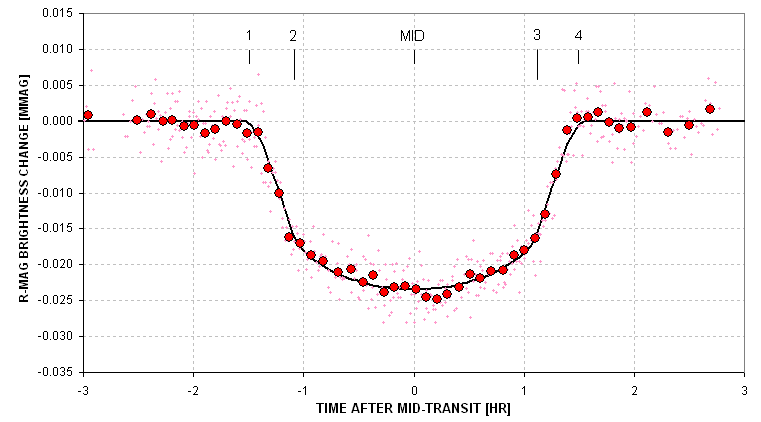

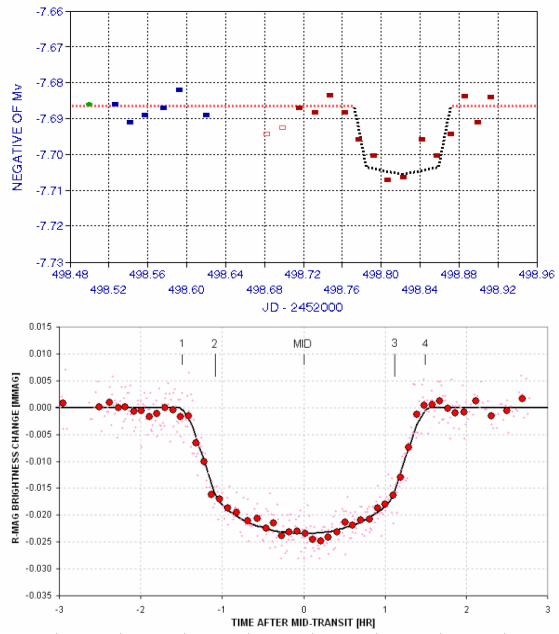

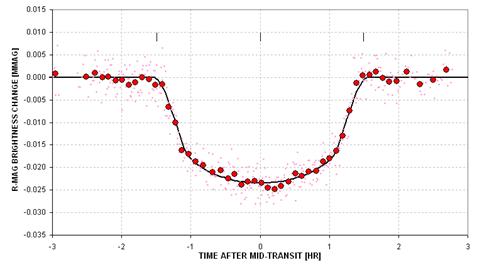

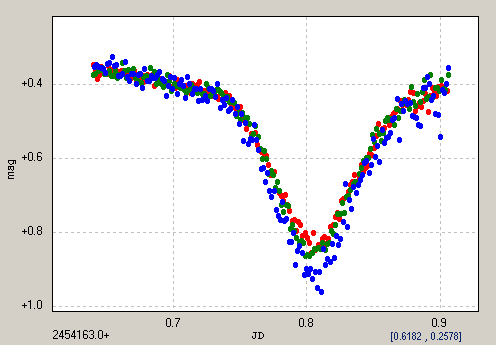

Author’s amateur exoplanet light curve of XO-1 made in 2006 (average of two transits) with a 14-inch telescope and R-band filter at his Hereford Arizona Observatory.

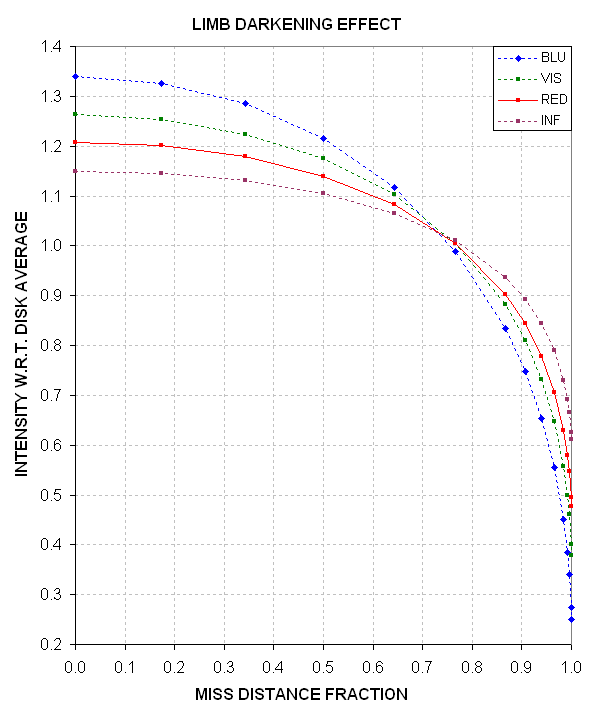

Exoplanet XO-1b moves in front of the star from contact 1 to contact 2, obscures ~2.2% of the star’s disk between contacts 2 and 3, and moves off the star from contact 3 to contact 4. The smooth variation between contacts 2 and 3 is produced by stellar “limb darkening.”
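The caption’s “~2.2%” can be converted into the units used throughout this book (milli-magnitudes) and into a rough planet-to-star size ratio. The Python sketch below is an illustration, not the author’s procedure: it assumes the fraction of starlight lost equals the fraction of the disk covered, and that depth ≈ (Rp/Rs)², which ignores the limb darkening just mentioned.

```python
import math

def fraction_to_mmag(fraction_lost: float) -> float:
    """Transit depth in milli-magnitudes for a fractional loss of starlight."""
    return -2500.0 * math.log10(1.0 - fraction_lost)

def radius_ratio(fraction_lost: float) -> float:
    """Planet/star radius ratio, ASSUMING depth = (Rp/Rs)**2 (no limb darkening)."""
    return math.sqrt(fraction_lost)

depth = 0.022  # ~2.2% of the stellar disk, as quoted for XO-1b
print(f"depth = {fraction_to_mmag(depth):.1f} mmag")  # depth = 24.2 mmag
print(f"Rp/Rs = {radius_ratio(depth):.3f}")          # Rp/Rs = 0.148
```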

______________________________________________________________________________________________________________

Reductionist Publications, d/b/a

5320 E. Calle Manzana

Hereford, AZ 85615

Other Books by Bruce L. Gary

ESSAYS FROM ANOTHER PARADIGM, 1992, 1993 (Abridged Edition)

GENETIC ENSLAVEMENT: A CALL TO ARMS FOR INDIVIDUAL LIBERATION, 2004, 2006, 2008

THE MAKING OF A MISANTHROPE: BOOK 1, AN AUTOBIOGRAPHY, 2005

A MISANTHROPE’S HOLIDAY: VIGNETTES AND STORIES, 2007

EXOPLANET OBSERVING FOR AMATEURS, 2007

QUOTES FOR MISANTHROPES: MOCKING HOMO HYPOCRITUS, 2007

THE MAKING OF A MISANTHROPE: BOOK 2, MIDNIGHT THOUGHTS, 2009

______________________________________________________________________________________________________________

Reductionist Publications, d/b/a

5320 E. Calle Manzana

Hereford, AZ 85615

USA

Copyright 2007 by Bruce L. Gary

All rights reserved except for brief passages quoted in a review. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without express prior permission from the publisher. Requests for usage permission or additional information should be addressed to:

“Bruce L. Gary” <bgary1@cis-broadband.com>

or

Reductionist Publications, d/b/a

5320 E. Calle Manzana

Hereford, AZ 85615

First edition: August 2007

Printed by Mira Digital Publishing, St. Louis, MO

ISBN 978-0-9798446-3-8

______________________________________________________________________________________________________________

Dedicated to the memory of

Carl Sagan

A giant among men, who would have loved the excitement of exoplanet discoveries, which would have further inspired him to speculate about life in the universe.

______________________________________________________________________________________________________________

C O N T E N T S

Preface

Introduction

1 Could I Do That?

2 Observatory Tour

3 Exoplanet Choices

4 Planning the Night

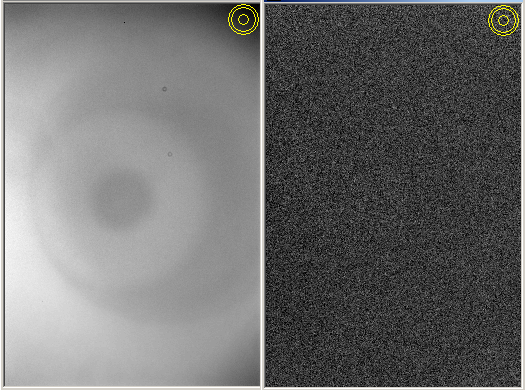

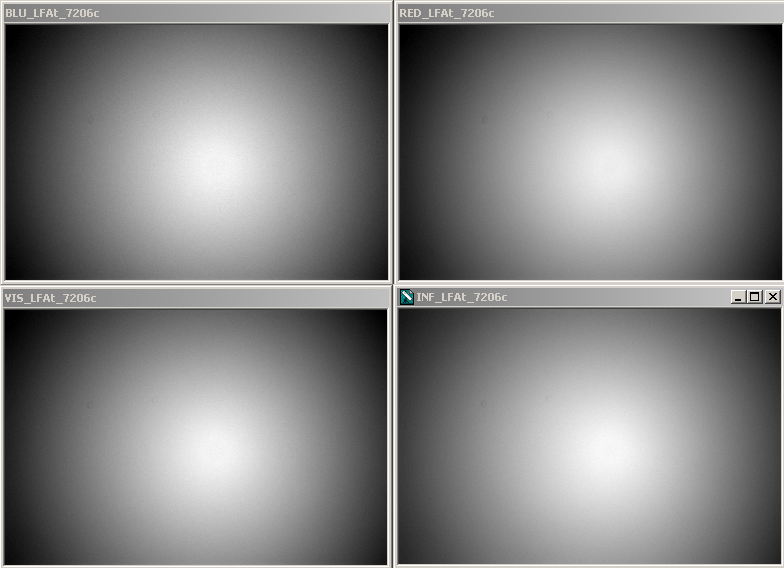

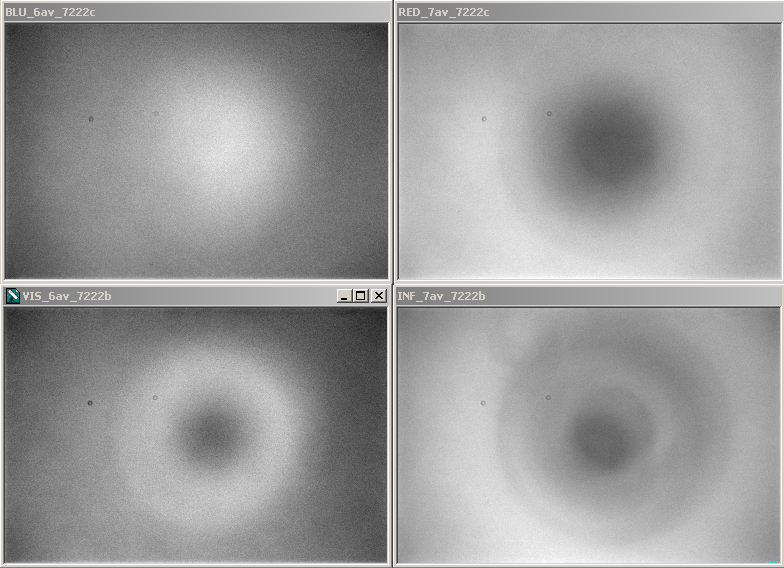

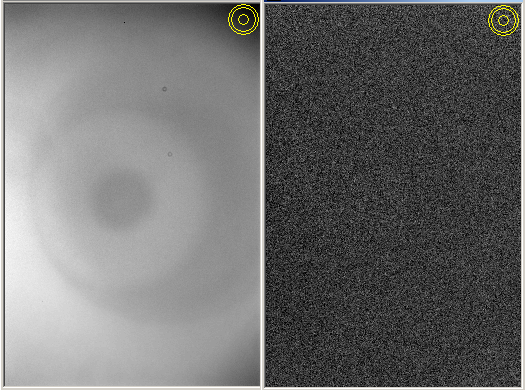

5 Flat Fields

6 Dark Frames

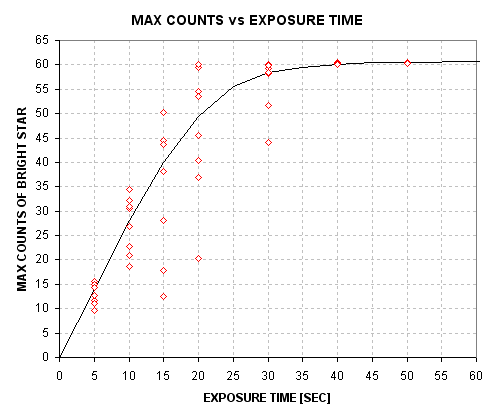

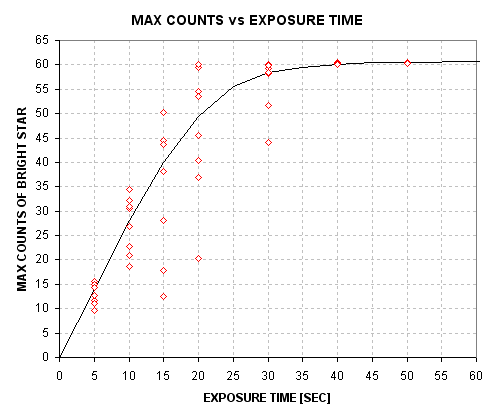

7 Exposure Times

8 Focus Drift

9 Autoguiding

10 Photometry Aperture Size

11 Photometry Pitfalls

12 Image Processing

13 Spreadsheet Processing

14 Star Colors

15 Stochastic SE Budget

16 Anomalies: Timing and LC Shape

17 Optimum Observatory

Appendix A – Flat Field Evaluation

Appendix B – Selecting Target from Candidate List

Appendix C – Air Mass from JD

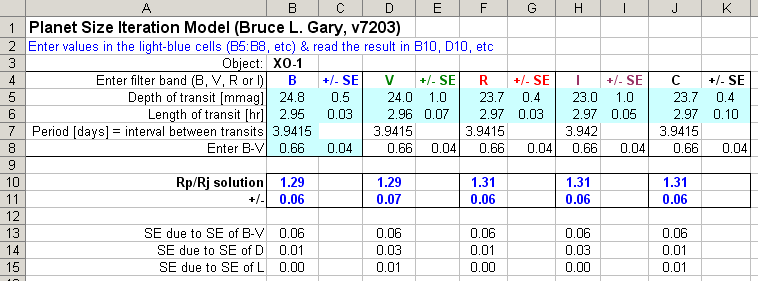

Appendix D – Planet Size Model

Appendix E – Measuring CCD Linearity

Appendix F – Measuring CCD Gain

Appendix G – Plotting Light Curve Data

Glossary

References

______________________________________________________________________________________________________________

─────────────────────────────────

PREFACE

─────────────────────────────────

The search for planets orbiting other stars interests even my daughters and neighbors. Why the public fascination with this subject? I think it’s related to the desire to find out if we humans are “alone in the universe.” This would also explain the heightened interest among exoplanet searchers in finding Earth-like planets. NASA and the NSF are keenly aware of this, and they are currently formulating a “vision” for future funding that is aimed at Earth-like exoplanet discoveries.

The author’s favorite telescope, a Meade RCX400 14-inch on an equatorial wedge.

The public’s interest in planets beyond our solar system may also account for Sky and Telescope magazine’s interest in publishing an article about the XO Project, a professional/amateur collaboration that found the transiting exoplanet XO-1b (since then two more discoveries have been announced by this project). The above picture, from the Sky and Telescope article (September 2006), helps make the point that amateur telescopes are capable of providing follow-up observations of candidates found by professionals using wide-field survey cameras. The XO Project is a model for future professional/amateur collaborations.

Astronomers, ironically, have traditionally tried to remain aloof from things that excited the general public. I recall JPL cafeteria conversations in the 1970s where I defended Carl Sagan’s right to communicate his enthusiastic love for astronomy to the public. There was a “pecking order” in astronomy at that time, which may still exist to some extent, in which the farther out your field of study the higher your status. Thus, cosmologists garnered the highest regard, and those who studied objects in our solar system were viewed with the least regard. My studies were of the moon, but I didn’t care where I was in this hierarchy. At that time there was only one level lower than mine: those who speculated about other worlds and the possibilities for intelligent life on them.

How things change! We now know that planets are everywhere in the galaxy. Billions upon billions of planets must exist! This is the message from the tally of 248 extra-solar planetary systems (as of mid-2007). Among them are 22 exoplanets that transit in front of their star (15 that are brighter than 13th magnitude), and the number is growing so fast that by the time this book appears the number could be two or three dozen.

It is important to realize that bright transiting exoplanets are far more valuable than faint or non-transiting ones! Bright transits allow an accurate measurement of the planet’s size and, combined with its mass, its density; spectroscopic investigations of atmospheric composition are also possible (successful in two cases). Even the exoplanet’s atmospheric temperature is open to investigation. When 2007 began, only 9 bright transiting exoplanets were known. Six months later there were 14!

Few people realize that part of the explosion of known transiting exoplanets can be attributed to the role played by amateur astronomers. Three of the 15 bright transiting exoplanets were discovered by the XO Project, which includes a team of amateurs. It is ironic that during the past few decades, as professional observatories have become more sophisticated and plentiful, amateurs have kept pace, thanks to improvements in technology that’s within amateur budgets, and we amateurs continue to make useful contributions. The discovery of exoplanets is one of the most fruitful examples!

Not only are amateurs capable of helping in the discovery of exoplanets through collaborations with professionals, but amateurs are well-positioned to contribute to the discovery of Earth-like exoplanets! This is explained in Chapter 16.

How can this be? After all, the professionals have expensive observatories on mountain tops, and they use very sophisticated and sensitive CCD cameras. But with this sophistication comes expensive operation on a per-minute basis! With telescope time so expensive, these highly capable facilities can’t be used for lengthy searches. Moreover, big telescopes have such a small field-of-view (FOV) that there usually aren’t any nearby bright stars within an image for use as a “reference.” The optimum size telescope for most ground-based exoplanet discovery has an aperture between 20 and 40 inches, as explained in Chapter 17. Such telescopes are within the reach of many amateurs. So far, most exoplanet discovery contributions by amateurs have been with telescope apertures in the 10 to 14 inch size range. Thousands of these telescopes are in use by amateurs today.

This book is meant for amateurs who want to observe exoplanet transits, and who may eventually want to participate in exoplanet discoveries. There are many ways for amateurs to have fun with exoplanets; some are “educational,” some could contribute to a better understanding of exoplanets, and others are aimed at new discoveries. The various options for exoplanet observing are explained in Chapter 3.

The advanced amateur

may eventually be recruited to become a member of a professional/amateur

team that endeavors to discover exoplanets. This might be the ultimate goal

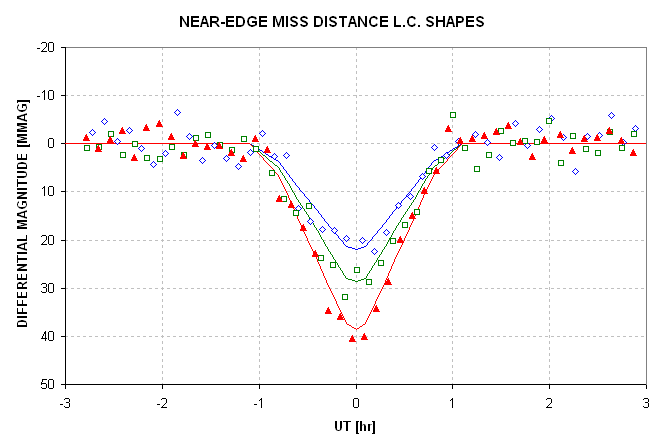

for some readers of this book. Let’s review how this works. A professional

astronomer’s wide-field survey camera, consisting of a regular telephoto

camera lens attached to an astronomer’s CDD,

monitors a set of star fields for several months before moving on to another

set of star fields. When a star appears to fade by a small amount for a short

time (e.g., <0.030 magnitude for ~3 hours), and when these fading events

occur at regular intervals (~3 days, typically), a larger aperture telescope

with good spatial resolution must be used to determine if the brightest

star in the survey camera’s image faded a small amount or a nearby fainter

star faded by a large amount (e.g., an eclipsing binary). Amateur telescopes

are capable of making this distinction since they can quickly determine

which star fades at the predicted times and how much it fades. As a bonus

the amateur observations can usually characterize the shape of the fading

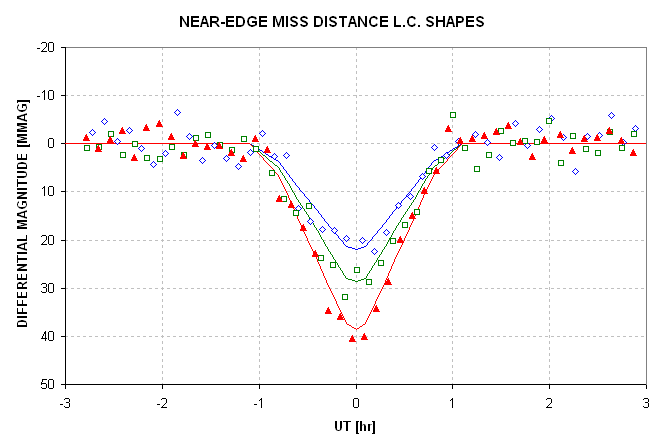

event, whether it is flat-bottomed or V-shaped. If the star that fades has

a depth of less than ~30 milli-magnitudes (mmag), and if the shape of the

fade is flat-bottomed, there is a good chance that a transiting exoplanet

has been identified. Armed with this information the professionals are justified

in requesting observing time on a large telescope to measure radial velocity

on several dates, and thereby solve for the mass of the secondary. If the

mass is small it must be an exoplanet.
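The rule of thumb just described (shallower than ~30 mmag and flat-bottomed) can be written as a tiny triage function. This Python sketch is only an illustration: `screen_candidate` and `mmag_to_fraction` are hypothetical helper names, and a real candidate evaluation weighs much more than these two criteria.

```python
def mmag_to_fraction(depth_mmag: float) -> float:
    """Fraction of the star's light lost for a fade of depth_mmag milli-magnitudes."""
    return 1.0 - 10.0 ** (-depth_mmag / 2500.0)

def screen_candidate(depth_mmag: float, flat_bottomed: bool,
                     max_depth_mmag: float = 30.0) -> str:
    """Hypothetical triage: a shallow AND flat-bottomed fade looks planet-like."""
    if depth_mmag < max_depth_mmag and flat_bottomed:
        return "promising: worth radial-velocity follow-up"
    return "less promising: deep or V-shaped fades suggest an eclipsing binary"

print(f"30 mmag = {100 * mmag_to_fraction(30.0):.1f}% of the light")  # 2.7%
print(screen_candidate(24.0, flat_bottomed=True))
print(screen_candidate(24.0, flat_bottomed=False))
print(screen_candidate(150.0, flat_bottomed=True))
```

Expressing the threshold as a fraction shows that a 30 mmag fade corresponds to only about 2.7% of the star’s light.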

As more wide-field survey cameras are deployed by the professionals in a search for transiting candidates, there will be a growing need for amateur participation to weed out the troublesome “blended eclipsing binaries.” This will allow the professionals to focus on only the good exoplanet candidates for big-telescope spectroscopic radial velocity measurements.

The role amateurs can play in this exploding field is exciting, but this role will require that the amateur learn how to produce high-quality transit light curves. A background in variable star observing would be helpful, but the exoplanet requirements are more stringent because the variations are so much smaller, so a new set of observing skills will have to be mastered by those making the transition. Image analysis skills will also differ from the variable star experience. This book explains the new and more rigorous observing and image analysis skills needed to be a partner with professionals in exoplanet studies.

The reader is entitled to know who I am, and my credentials for writing such a book. I spent 34 years employed by Caltech and assigned to work at the Jet Propulsion Laboratory (JPL) on planetary radio astronomy, microwave remote sensing of the terrestrial atmosphere, and airborne sensing of the atmosphere for studies of stratospheric ozone depletion. I have about 55 peer-reviewed publications in various fields, and four patents on aviation safety using microwave remote sensing concepts and an instrument that I developed. I retired in 1998, and a year later resumed a childhood hobby of optical astronomy. I was one of the first amateurs to observe an exoplanet transit (HD209458, in 2002).

I have been a member of the XO Project’s extended team (ET) of amateur observers since its inception in 2004. The XO Project was created by Dr. Peter McCullough, a former amateur who is now a professional astronomer at the Space Telescope Science Institute (STScI). The XO Project has announced the discovery of three exoplanets: XO-1b, XO-2b and XO-3b. All members of the XO team are co-authors of the announcement publications in the Astrophysical Journal. I have worked with fellow ET members for 2.5 years, and I am familiar with the issues that amateurs face when changing from variable star observing to exoplanet transit observing. The XO Project is the only professional/amateur collaboration for exoplanet discovery. I believe it will soon become generally recognized that the XO Project model for involving amateurs is a cost-effective and very productive way to achieve results in the discovery and study of exoplanets.

I want to thank Dr. Steve Howell (National Optical Astronomy Observatory, Tucson, AZ) for writing an article for The Planetary Society (Howell, 2002) after the discovery of HD209458b, the first transiting exoplanet to be discovered (Charbonneau, 1999). In this article he explained how accessible exoplanet transit observing is for amateurs, and this led to my first successful observation of an exoplanet transit.

I also want to thank Dr. Peter McCullough for inviting me to join the XO ET in December 2004. In mid-2006 Chris Burke joined the XO Project, and 5 amateurs were added to the original 4-member ET. Today the ET consists of the following amateurs (names of the original ET are in bold): Ron Bissinger, Mike Fleenor, Cindy Foote, Enrique Garcia, Bruce Gary, Paul Howell, Franco Mallia, Gianluca Masi and Tonny Vanmunster. Thank you all for this wonderful learning experience and the fun of being part of a high-achieving team.

I am grateful to the Society for Astronomical Sciences for permission to use figures in this book that were presented on my behalf at their 2007 annual meeting and published in the meeting proceedings. Thanks are also due to Cindy Foote for allowing me to reproduce her amazing light curves of an exoplanet candidate made with 3 filters on the same night.

Almost all figures are repeated in the “color center insert.”

─────────────────────────────────

INTRODUCTION

─────────────────────────────────

This book is intended for use by amateur astronomers, not professional astronomers. The distinction is not that professional astronomers already understand everything in this book; it’s that the professionals don’t need to know most of what’s in it.

Professionals don’t need to know how to deal with telescopes with an imperfect polar alignment (because their telescopes are essentially perfectly aligned). They don’t have to deal with telescopes that don’t track perfectly (because their tracking gears are close to perfect). They don’t have to worry about focus changing during an observing session (because their “tubes” are made of low thermal expansion materials). They don’t have to worry about CCDs with significant “dark current” thermal noise (because their CCDs are cooled with liquid nitrogen). Professionals don’t have to worry about scintillation noise (because it’s much smaller with large apertures). Professionals can usually count on sharp images the entire night with insignificant changes in “atmospheric seeing” (because their observatories are at high altitude sites and the telescope apertures are situated well above ground level). Professionals also don’t have to deal with large atmospheric extinction effects (again, because their observatories are at high altitude sites).

If a professional astronomer had to use amateur hardware at an amateur site, they would have to learn new ways to overcome the limitations that amateurs deal with every night. There are so many handicaps unique to the amateur observatory that we should not look to the professional astronomer for help on these matters. Therefore, amateurs should look to each other for solutions to these problems. In other words, don’t expect a book on amateur observing tips to be written by a professional astronomer; only another amateur can write such a book.

I’ve written this book with experience as both a professional astronomer and a post-retirement amateur. Only the first decade of my professional life was in astronomy, as a radio astronomer. The following three decades were in the atmospheric sciences, consisting of remote sensing using microwave radiometers. Although there are differences between radio astronomy and optical astronomy, and bigger differences between atmospheric remote sensing with microwave radiometers and optical astronomy, they share two very important requirements: 1) the need to optimize observing strategy based on an understanding of hardware strengths and weaknesses, and 2) the need to deal with stochastic noise and systematic errors during data analysis.

This book was written for the amateur who may not have the background and observing experience that I brought to the hobby 8 years ago. How can readers know if they’re ready for this book? Here’s a short litmus test question: do you know the meaning of “differential photometry”? If so, and if you’ve done it, then you’re ready for this book.
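For readers who want a reminder of what the litmus test refers to, here is a minimal Python sketch of differential photometry. It assumes sky-subtracted fluxes have already been measured for the target and for reference stars in the same image; the flux values are invented for illustration and are not from any real observation. Because the target and the references pass through the same atmosphere, a change in transparency cancels in the difference while a real dimming of the target does not.

```python
import math

def instrumental_mag(flux_adu: float) -> float:
    """Instrumental magnitude from a sky-subtracted flux (arbitrary zero point)."""
    return -2.5 * math.log10(flux_adu)

def differential_mag(target_flux: float, ref_fluxes: list[float]) -> float:
    """Target magnitude minus the magnitude of the summed reference stars."""
    return instrumental_mag(target_flux) - instrumental_mag(sum(ref_fluxes))

# Hypothetical fluxes (ADU) from two images of the same field. In the second
# image the sky is 10% more transparent for every star, and the target has
# also dimmed by 2% (as during a transit).
img1 = differential_mag(50_000.0, [40_000.0, 30_000.0])
img2 = differential_mag(50_000.0 * 1.10 * 0.98, [40_000.0 * 1.10, 30_000.0 * 1.10])
print(f"change in differential magnitude: {1000 * (img2 - img1):.1f} mmag")
# change in differential magnitude: 21.9 mmag
```

The 10% transparency change drops out, and only the target’s 2% dimming (about 22 mmag) remains.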

Lessons Learned

One of the benefits of experience is that there will have been many mistakes and “lessons learned,” and these can lead to a philosophy for the way of doing things. One of my favorite philosophies is: KNOW THY HARDWARE! It takes time to learn the idiosyncrasies of an observing system, and no observing system works the way it might be described in a textbook. There are usually myriad little things that can ruin the best-planned observing session. Only through experience with one particular observing system can these pitfalls be avoided. I therefore encourage the serious observer to plan on a long period of floundering before serious observing begins. For example, during the floundering phase try different configurations: prime focus, Cassegrain, use of a focal reducer, placement of the focal reducer, use of an image stabilizer, etc. During this learning phase try different ways of finding focus, tracking focus drift, autoguiding, pointing calibration, etc. Keep a good observing log for checking back to see what worked.

One of my neighbors has a 32-inch telescope in an automated dome, and it’s a really neat facility. But as he knows, I prefer to use my little 14-inch telescope (whenever its aperture is adequate for the job) for the simple reason that I understand most of the idiosyncrasies of my system, whereas I assume there are many idiosyncrasies of his system that I don’t understand.

At a professional observatory the responsibility for “know thy hardware” is distributed among many people. Their staff will include a mechanical engineer, an electrical engineer, a software control programmer, an optician to perform periodic optical alignment, someone to perform pointing calibrations and update coefficients in the control software, a telescope operator, a handyman for maintaining utilities and groundskeeping, and a director to oversee the work of all these specialists. Therefore, when an astronomer arrives for an observing session, or submits the specifics of an observing request for which he will not be present, all facets of “know thy hardware” have already been satisfied.

In contrast, the amateur observer fills all of the above job responsibilities. He is the observatory “director,” he does mechanical and electrical calibration and maintenance, he’s in charge of programming, pointing calibration and scheduling, and he’s the telescope operator; the amateur is also his own “funding agency.” Thus, when the amateur starts an observing session he has removed his mechanical engineer hat, his programmer’s hat, and all the other hats he wore while preparing the telescope system for observing, and he becomes the telescope operator carrying out the observing request of the astronomer whose hat he wore before the observing session began. The admonition to “know thy hardware” can be met in different ways, as illustrated by the professional astronomer’s many-man team and the amateur astronomer’s one-man team.

I once observed with the Palomar 200-inch telescope, and believe me when I say that it’s more fun observing with my backyard 14-inch telescope. At Palomar I handed the telescope operator a list of target coordinates, motion rates and start times, and watched him do the observing. I had to take it on faith that the telescope was operating properly. With my backyard telescope I feel “in control” of all aspects of the observing session; I know exactly how the telescope will perform and I feel comfortable that my observing strategy is a good match to the telescope system’s strengths and weaknesses. Based on this experience I will allege that amateur observing is more fun!

Another of my philosophies is: GOOD DATA ANALYSIS IS JUST AS IMPORTANT AS GETTING GOOD DATA. It is customary in astronomy, as well as many observing fields, to spend far more time processing data than taking it. A single observing session may warrant weeks of analysis. This is especially true when using an expensive observing facility, but the concept also can apply to observations with amateur hardware.

One last philosophy I’ll mention is: WHEN YOU SEE SOMETHING YOU DON’T UNDERSTAND, WHILE OBSERVING OR DURING DATA ANALYSIS: STOP, DON’T PROCEED UNTIL YOU UNDERSTAND IT. It is probably difficult to make a convincing case for this one unless you’ve ignored the advice and wasted time with fundamentally flawed data or analysis procedures. This advice is especially true if you’re writing a computer program to process data, because program bugs are a part of every programming experience. A corollary to this advice might be: never believe anything you come up with, even if it makes sense, because when there’s a serious flaw in your data or analysis it may show itself as a subtle anomaly that could easily be ignored.

These are some of the admonitions that will recur throughout this book. Some readers will find that I’m asking them to put too much work into the process. My advice may seem more appropriate for someone with a professional dedication to doing things the right way. If this is your response to what I’ve written, then maybe you’re not ready yet for exoplanet transit observing. Remember, if it’s not fun, you probably won’t do a good job. If you don’t enjoy floundering with a telescope, trying to figure out its idiosyncrasies, then you probably won’t do a good job of learning how to use your telescope properly. This hobby should be fun, and if a particular project seems like work, then consider a different project! Astronomy is one of those hobbies with many ways to have fun, and I dedicate this book to those advanced amateurs who like having fun with exoplanet transit observing.

─────────────────────────────────

Chapter 1

“Could I do that?”

─────────────────────────────────

“Could I do that?” was my reaction 5 years ago to an article claiming that amateurs could observe exoplanet transits (Howell, 2002).

The article stated that transits of HD209458 had even been measured with a 4-inch aperture telescope. Could this be true, or was it hype for selling magazines? The article appeared in The Planetary Society’s The Planetary Report, a reputable magazine. I had a Meade 10-inch LX200 telescope and a common CCD camera which I had just begun to use for variable star observing.

“Why not?” I decided, with nothing to lose by trying.

My First Transit Observation in 2002

Before the next transit on the schedule I e-mailed the author of the article, Dr. Steve Howell, and asked if he had any advice. He suggested using a filter, such as V-band (green), and to “keep the target near the center of the image.”

On the night of August 11, 2002, I spent about 9 hours taking images of HD209458 with a V-band filter. The next day I processed the images and was pleasantly surprised to see a small “dip” in my plot of brightness versus time that occurred “on schedule” (Fig. 1.01). The depth was also about right, but since my observations were “noisy” they were really a “detection” instead of a measurement. Nevertheless, it felt good to join a club of about a half-dozen amateurs who had detected an exoplanet transit.

By today’s standards my CCD was unimpressive (slow downloads, not a large format) and my telescope was average. The only thing advanced was my use of MaxIm DL (version 3.0) for image processing. Even my spreadsheet was primitive (Quattro Pro 4.0). Today there must be 1000 amateurs with better hardware than I had 5 years ago, based on membership numbers of the AAVSO (American Association of Variable Star Observers).

I recall thinking “If only there were a book on how to observe exoplanet transits.” There couldn’t be such a book, of course, since the first amateur observation of HD209458 had been made less than 2 years earlier by a group in Finland led by Arto Oksanen (http://www.ursa.fi/sirius/HD209458/HD209458_eng.html). Besides, this was the only known transiting exoplanet at that time. Moreover, not many amateurs had a 16-inch telescope like the one used by Oksanen’s team. The idea of amateurs observing an exoplanet transit was a “novelty.” But that was then, and this is now!

I now “know what to do”; to see what a difference that makes, look at Figure 1.01.

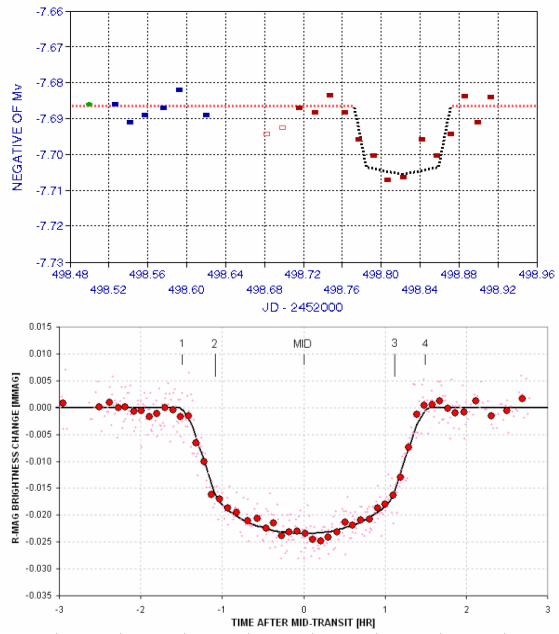

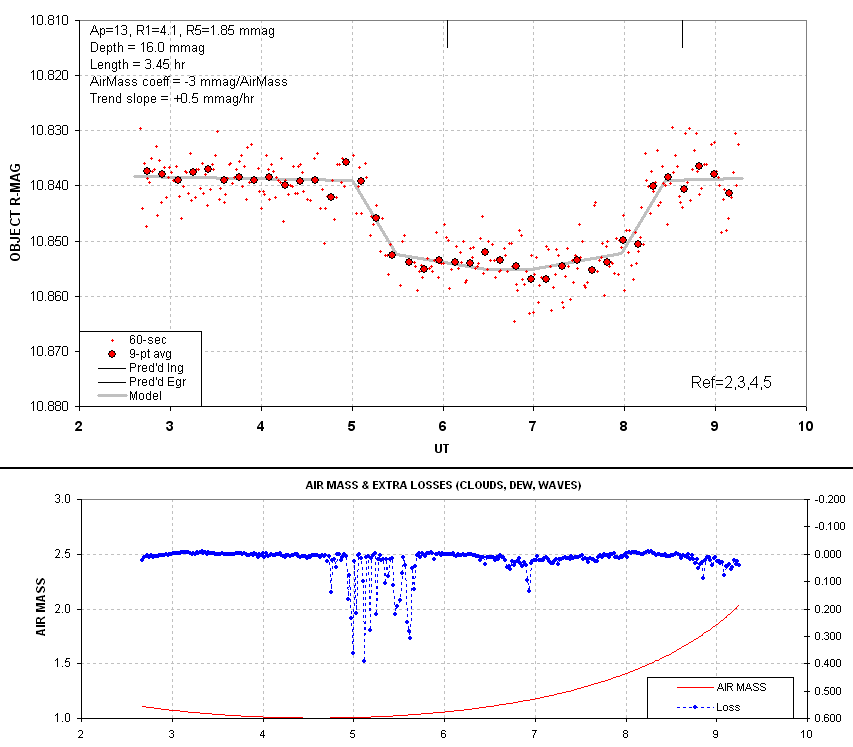

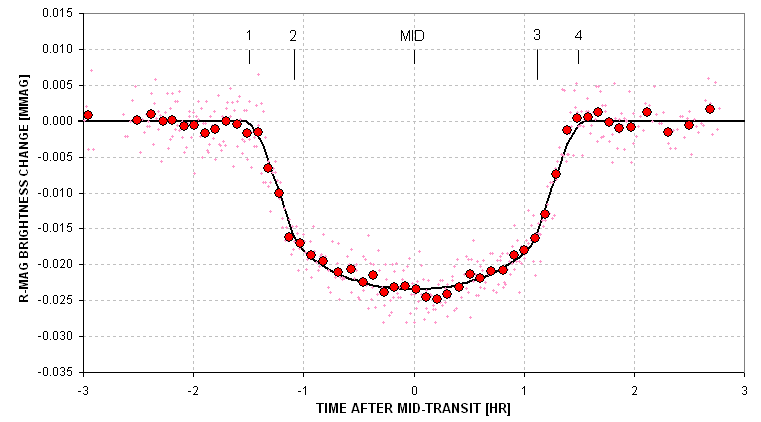

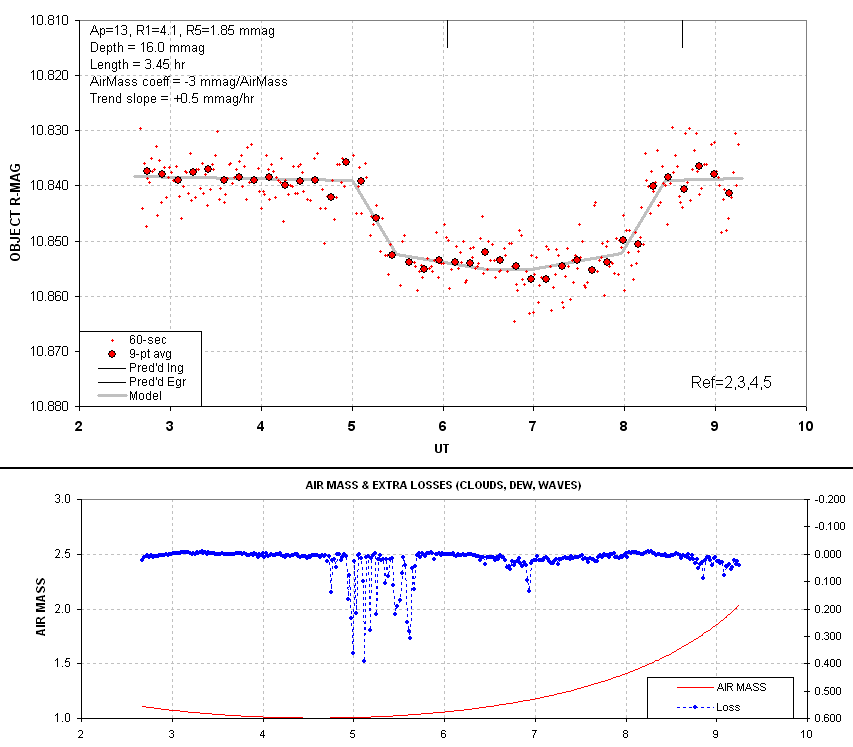

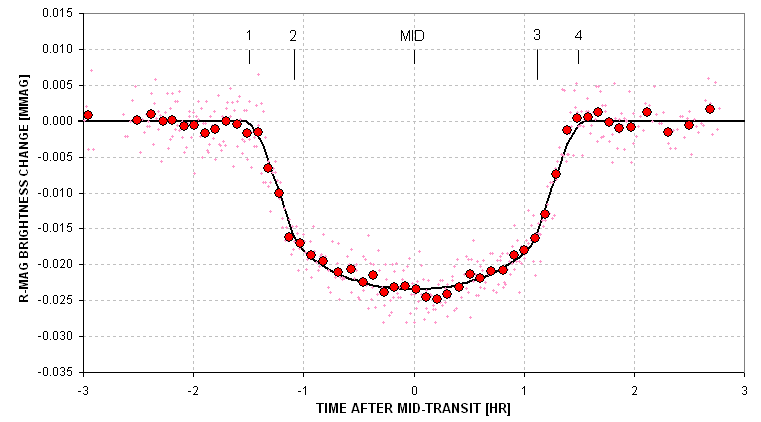

Figure 1.01. Knowing what to do makes a difference. Upper panel: my first light curve of HD209458, made 2002 August 12. Lower panel: a recent light curve of XO-1 made in 2006 (average of March 14 and June 1 transits).

During the past 5 years my capability has improved ~70-fold, and most of this is due to improved technique. Although I now use a 14-inch telescope, if I were to use the same 10-inch that I used 5 years ago for my first exoplanet transit, I could achieve in one minute what took me 15 minutes back then. Some of this improvement is due to use of a slightly improved CCD, and some is from use of a tip/tilt image stabilizer, but most of the improvement is due to improved techniques for observing, image processing and spreadsheet analysis. These are things that can be shared with other amateurs in a book. That’s the book I wanted 5 years ago, and you are now holding such a book. It is based on 5 years of floundering and learning. It can save you lots of time with “trial and error” observing and processing ideas, and give you a 15-fold advantage that I never had for my first exoplanet transit observation.
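The text does not spell out how “one minute now versus 15 minutes then” translates into light-curve noise. One common interpretation, assumed here, is that for purely stochastic (uncorrelated) noise the scatter of an average of N images falls as 1/√N, so a 15-fold saving in observing time is equivalent to about √15 ≈ 3.9 times less noise for the same time spent. The Python sketch checks that rule with a small simulation; the 10 mmag per-image scatter is a made-up value, and real light curves also contain systematic errors that do not average down this way.

```python
import math
import random

def scatter_of_average(sigma_single_mmag: float, n_images: int) -> float:
    """Expected RMS of the mean of n independent images (white noise only)."""
    return sigma_single_mmag / math.sqrt(n_images)

# Equivalent-time factor quoted in the text: 15 minutes then vs 1 minute now.
time_factor = 15
print(f"noise reduction at equal observing time: {math.sqrt(time_factor):.2f}x")  # 3.87x

# Monte Carlo check with a hypothetical 10 mmag per-image scatter.
random.seed(1)
sigma, n = 10.0, 15
means = [sum(random.gauss(0.0, sigma) for _ in range(n)) / n for _ in range(20_000)]
rms = math.sqrt(sum(m * m for m in means) / len(means))
print(f"simulated {rms:.2f} mmag vs predicted {scatter_of_average(sigma, n):.2f} mmag")
```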

Minimum Requirements for Exoplanet Transit Observing

You don’t have to live on a mountain top to observe exoplanet transits. My 2002 transit observation was made from my backyard in Santa Barbara, CA, located only 200 feet above sea level. Dark skies are not even a requirement; my Santa Barbara residence was within the city, and my skies didn’t even resemble “dark” until after midnight. For pretty picture imaging, where dark skies matter more, I disassembled my telescope and put it in my trunk for a drive to the nearby mountains. I now live in Arizona, but my darker skies are only a bonus, not a requirement.

What about “seeing”?

Good atmospheric seeing is nice, but again it’s not a requirement. I actually

had more moments of good seeing in Santa Barbara than here in Arizona, at a 4660 foot

altitude site. In fact, some of the sharpest images of planets come from

Florida and Singapore, both sea level

sites. Seeing is mostly influenced by winds at ground level, and the height

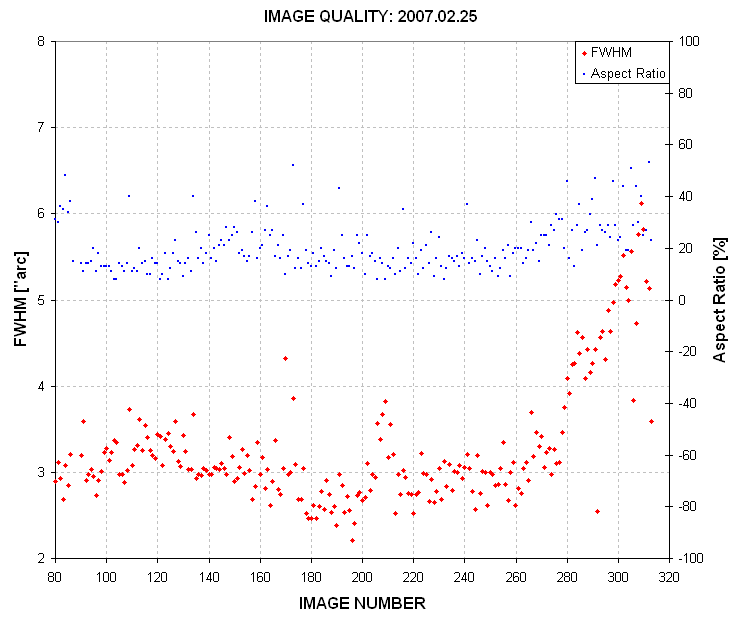

of the telescope above ground. My median seeing in Arizona is ~3.0 “arc for

typical exposure times (30 to 60 seconds).

Telescope aperture matters, yes, but an 8-inch aperture is adequate for the brighter transiting exoplanets (10th magnitude). For most transiting exoplanets a 12-inch aperture is adequate. Since the cost/performance ratio increases dramatically for apertures above 14 inches, there are a lot of 14-inch telescopes in amateur hands. I’ve never owned anything larger, and everything in this book can be done with this size telescope. My present telescope is a 14-inch Meade LX200 GPS; to mount it equatorially you’ll need a “super wedge.”
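The aperture guidance above rests on how collecting area grows with diameter. This Python sketch makes that scaling explicit, using the 8-inch aperture as the reference. It assumes the star’s own photon noise dominates, which is optimistic: as noted in the Introduction, scintillation is a bigger problem for small apertures, and sky background and systematic errors also matter, so treat the numbers as a rough guide only.

```python
import math

def light_ratio(d_in: float, d_ref_in: float) -> float:
    """Light-gathering power relative to a reference aperture (ratio of areas)."""
    return (d_in / d_ref_in) ** 2

def snr_ratio_photon_limited(d_in: float, d_ref_in: float) -> float:
    """SNR ratio for equal exposures, ASSUMING the star's photon noise dominates."""
    return math.sqrt(light_ratio(d_in, d_ref_in))

def equivalent_mag_gain(d_in: float, d_ref_in: float) -> float:
    """How much fainter a star can be and still deliver the same photon count."""
    return 2.5 * math.log10(light_ratio(d_in, d_ref_in))

for d in (8, 10, 12, 14):
    print(f"{d:>2}-inch vs 8-inch: light x{light_ratio(d, 8):.2f}, "
          f"SNR x{snr_ratio_photon_limited(d, 8):.2f}, "
          f"same counts {equivalent_mag_gain(d, 8):.2f} mag fainter")
```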

CCD cameras are so cost-effective these days that almost any astronomical CCD camera now in use should be adequate for exoplanet observing. If you have an old 8-bit CCD, that’s not good enough; you’ll have to buy a 16-bit camera. For a bigger field-of-view, consider spending a little more for a CCD camera with a medium-sized chip. My CCD is a Santa Barbara Instrument Group (SBIG) ST-8. You’ll need a color filter wheel for the CCD camera, and this is usually standard equipment that comes with the camera.
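One way to see why an 8-bit camera falls short: an analog-to-digital converter with b bits has 2^b output levels, so with only 256 levels a single count is a sizable fraction of a star’s signal. This Python sketch is an illustration under stated assumptions, not a camera specification: it assumes a pixel sitting at three-quarters of full scale (a hypothetical choice to stay clear of saturation). Real aperture photometry sums many pixels and noise partly smooths out quantization, so the per-pixel numbers overstate the effect, but the contrast with a ~24 mmag transit depth is still telling.

```python
import math

def one_count_in_mmag(bits: int, peak_fraction: float = 0.75) -> float:
    """Brightness step (mmag) represented by one count at a given fraction of full scale."""
    full_scale = 2 ** bits - 1
    signal = peak_fraction * full_scale  # ASSUMED pixel level, in counts
    return 2500.0 * math.log10((signal + 1.0) / signal)

for bits in (8, 16):
    print(f"{bits:>2}-bit: {2 ** bits} levels, one count ~ {one_count_in_mmag(bits):.3f} mmag")
```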

Although I recommend use of a tip/tilt image stabilizer, it’s definitely not a requirement. Few people use such a device for removing small, fast movements of the star field.

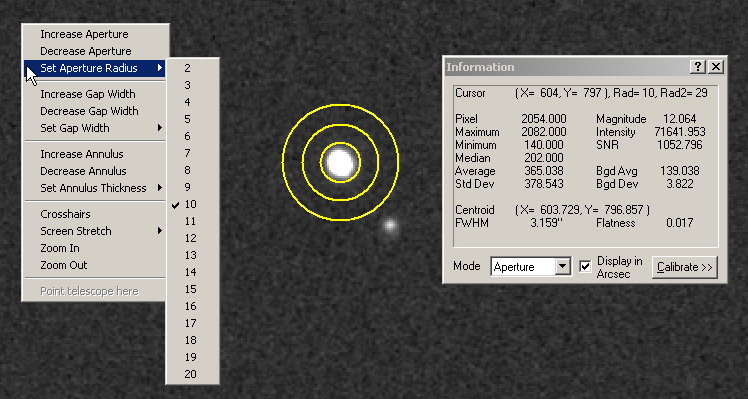

Software! Yes, software is a requirement and your choice can be important. I’ve been using MaxIm DL/CCD for 6 years, and it’s an impressive program that does everything. MDL, as I’ll refer to it, controls the telescope, the telescope’s focuser, the CCD, the color filter wheel and the image stabilizer if you have one. It also does an excellent job of image processing, and after it performs a photometry analysis you may use it to create a text file for import to a spreadsheet. Other exoplanet observers use AIP4WIN, and it also does a good job. CCDSoft might do the job, but I find it lacking in user-friendliness and capability.

A spreadsheet is another important program you’ll need to use. Many computers with a Windows operating system come with Excel, and even though Excel seems constructed to meet the needs of an executive who wants to make a pie chart showing sales, it is also a powerful spreadsheet for science. I’ve migrated all my spreadsheet work to Excel, and that’s what I assume you’ll be using in Chapter 13.

Previous Experience

Whenever an amateur astronomer considers doing something new, it is natural to ask if previous experience is adequate, especially if there is no local astronomy club with experienced members who can help out with difficult issues. Some people prefer to learn without help, and I’m one of them. The astronomy clubs I’ve belonged to emphasized the eyepiece “Wow!” version of amateur astronomy, so help was never available locally. This will probably be the case for most amateurs considering exoplanet observing. Being self-taught means you spend a lot of time floundering! Well, I like floundering! I think that’s the best way to learn. Anyone reading these pages who also likes floundering should consider setting this book aside, with the intention of referring to it only when floundering fails. Those who don’t like floundering should read on.

The best kind of amateur astronomy experience that prepares you for producing exoplanet light curves is variable star observing using a CCD. “Pretty pictures” experience will help a little, since it involves dark frame and flat frame calibration. But variable star observing requires familiarity with “photometry,” and that’s where previous experience is most helpful.

One kind of photometry

of variable stars consists of taking an image of stars that are known to

vary on month or longer time scales, and submitting measurements of their

magnitude to an archive, such as the one maintained by the AAVSO. Another

kind of variable star observing, which requires more skill, is monitoring

variations of a star that changes brightness on time scales of a few minutes.

For example, “cataclysmic variables” are binaries in which one member has

an accretion disk formed by infalling gas from its companion. The stellar

gas does not flow continuously from one star to the other, but episodes

of activity may occur once a decade, approximately. An active period for

gas exchange may last a week or two, during which time the star is ~100

times brighter than normal. The cataclysmic variable rotates with a period

of about 90 minutes, so during a week or more of heightened activity the

bright spot on the accretion disk receiving gas from its companion will

rotate in and out of view, causing brightness to undergo large “superhump”

variations every rotation (90 minutes). The amplitude of these 90-minute

variations is of order 0.2 magnitude. Structure is present that requires

a temporal resolution of a couple minutes.

Any amateur who

has observed cataclysmic variable superhumps will have sufficient experience

for making an easy transition to exoplanet observing. Amateurs who have

experience with the other kind of observing, measuring the brightness of

a few stars a few times a month, for example, will be able to make the transition

to exoplanet observing, but it will require learning new skills. Someone

who has never performed photometry of any stars may want to consider deferring

exoplanet observing until they have some of the more traditional photometry

experience.

I’ll make one exception to the experience requirements described above. Anyone with work experience

making measurements and performing data analysis, regardless of the field,

is likely to have already acquired the skills needed for exoplanet monitoring,

even if they have never used a telescope. For example, before retiring

I spent three decades making measurements and processing data as part of

investigations within the atmospheric sciences. I think that experience

alone would have been sufficient background for the astronomy hobby that

I started 8 years ago. I’ll agree that my amateur astronomy experience

when I was in high school (using film!) was helpful. And I’ll also agree

that my decade of radio astronomy experience 4 decades ago was also helpful,

but the differences between radio astronomy and optical astronomy are considerable.

For anyone who has never used a telescope, yet has experience with measurements

and data analysis, I am willing to suggest that this is adequate for “jumping

in” and starting exoplanet observing without paying your dues to the AAVSO

conducting variable star observations! The concepts are straightforward

for anyone with a background in the physical sciences.

What are the “entry

costs” for someone who doesn’t own a telescope but who has experience with

measurements and data analysis in other fields? Here’s an example of what

I would recommend as a “starter telescope system” for such a person:

Meade 10-inch telescope

monochrome 16-bit CCD with color filters

equatorial wedge for polar mounting

MaxIm DL/CCD

Total cost about $5000

Celestron telescopes

are another option, but their large aperture telescopes (>8-inch) are

mounted in a way that requires “meridian flips” and these can ruin the light

curve from a long observing session.

It has been estimated

that tens of thousands of astronomical CCD cameras have been sold during

the past two decades, and most of these were sold to amateur astronomers.

The number of telescopes bought by amateurs is even higher. Many of these

amateur systems are capable of observing exoplanet transits. Amateur astronomy

may not be the cheapest hobby, but there are many more expensive ones. With

the growing affordability of CCD cameras and telescopes, and a consequent

lowering of the $5000 entry level, the number of amateurs tempted by exoplanet observing could reach the thousands in the near future.

Imagine the value

of an archive of exoplanet transit observations with contributions from

several hundred amateurs. The day may come when every transit of every known

transiting exoplanet will be observed (except for those faint OGLE and very

faint HST ones). Changes of transit shape and timings are possible, and

these can be used to infer the existence of new planets, smaller and more

interesting ones. The job is too large for the small number of professional

observatories, and the cost of using them for this purpose is prohibitive.

If you are considering

a hobby that’s fun and scientifically useful, and if you’re willing to

learn new observing skills and spend time processing a night’s images,

then welcome to the club of amateur exoplanet observers.

─────────────────────────────────

Chapter 2

Observatory Tour

─────────────────────────────────

Since I will

be using real data to illustrate systematic errors I will describe my observing

systems. Note the use of the word "systems" in the plural form. Even with

one telescope it will matter whether you are configured Cassegrain or prime

focus, and whether a dew shield is used, or whether a focal reducer lens

is used, and where it's inserted. Every change of configuration will change

the relative importance of the various systematic error sources. During

the past year I have had three different telescopes, so I am aware of issues related to telescope design differences, such as the problems produced by meridian flips (e.g., with the Celestron). All of these telescopes have had 14-inch apertures with catadioptric optics: Celestron CGE-1400, Meade RCX400 and Meade LX200GPS. Most of my illustrations will be with the last one.

These are typical telescopes now in use by advanced amateurs for exoplanet

transit observations.

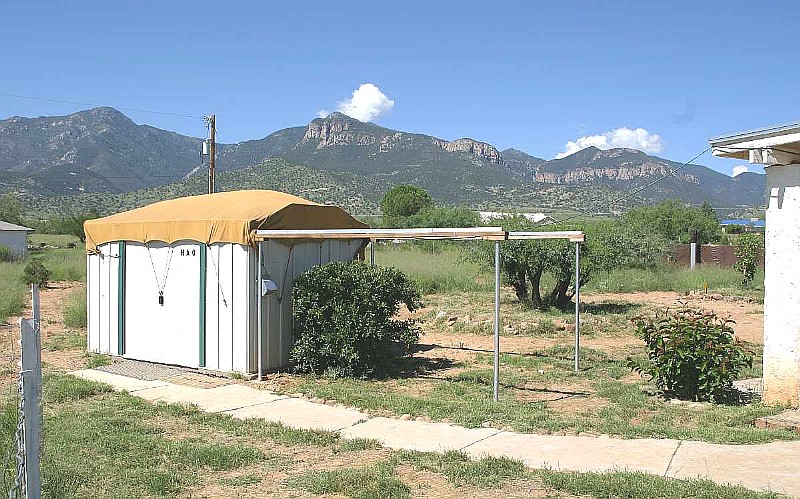

I use a sliding

roof observatory located in Southern Arizona, at an altitude of 4660 feet. Atmospheric extinction

values for B, V, R and I bands are typically 0.25, 0.16, 0.13 and 0.08

magnitude per air mass.
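These coefficients are easy to apply. The following sketch estimates how much each band is dimmed at a given elevation. It assumes the simple plane-parallel approximation, air mass ≈ 1/sin(elevation), which is adequate above about 20 degrees. The function names are illustrative and are not taken from any particular program.

```python
import math

# Typical zenith extinction (magnitudes per air mass) at the author's site;
# substitute values measured at your own site.
EXTINCTION_MAG_PER_AIRMASS = {"B": 0.25, "V": 0.16, "R": 0.13, "I": 0.08}


def air_mass(elevation_deg: float) -> float:
    """Plane-parallel approximation, air mass = 1/sin(elevation).

    Reasonable above ~20 degrees elevation; it overestimates air mass
    closer to the horizon.
    """
    return 1.0 / math.sin(math.radians(elevation_deg))


def extinction_loss_mag(band: str, elevation_deg: float) -> float:
    """Total atmospheric dimming (magnitudes) in a band at this elevation."""
    return EXTINCTION_MAG_PER_AIRMASS[band] * air_mass(elevation_deg)


if __name__ == "__main__":
    for elev in (90, 60, 30, 20):
        print(f"elevation {elev:2d} deg: air mass {air_mass(elev):.2f}, "
              f"R-band loss {extinction_loss_mag('R', elev):.3f} mag")
```

Going from the zenith to 30 degrees elevation doubles the air mass. The R-band loss therefore rises from 0.13 to about 0.26 magnitude. Because extinction depends on color, target and comparison stars of different color are affected slightly differently as air mass changes.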

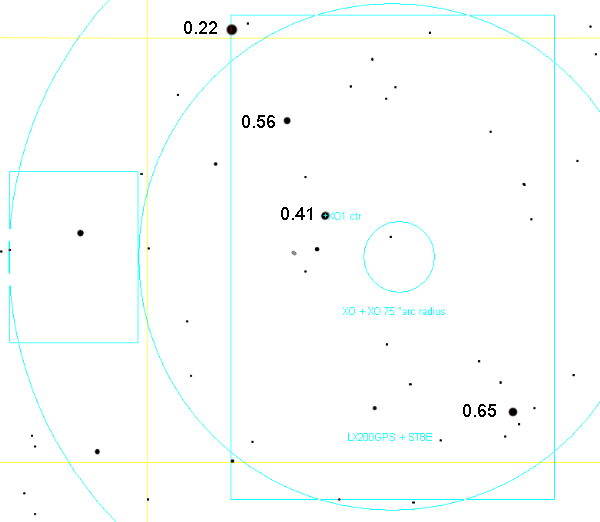

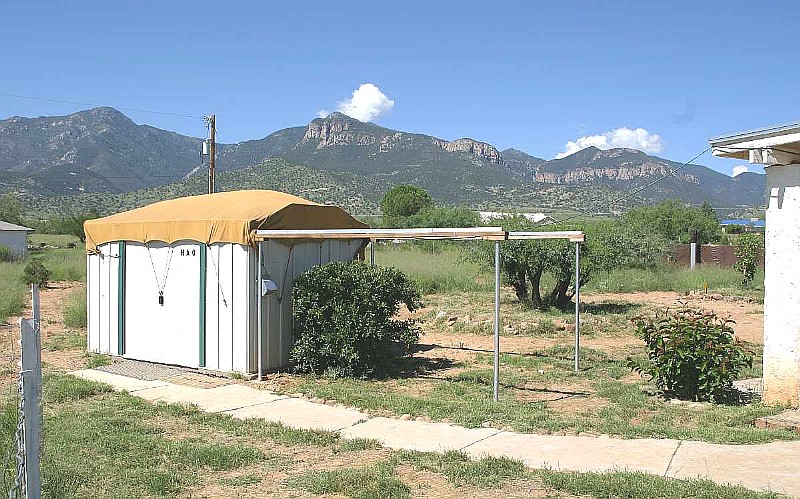

Figure 2.01 “Hereford Arizona Observatory” with a canvas-covered

sliding roof. The Minor Planet Center has assigned it a site code of

G95.

All control

functions are performed by a computer in my house, using 100-foot cables

in buried conduit (the control room is shown in Figs. 2.03 and 2.04). For

all Cassegrain configurations I use an SBIG AO-7 tip/tilt image stabilizer.

It can usually be run at ~5 Hz. My favorite configuration is Cassegrain

(next figure) that has back-end optics consisting of the AO-7, a focal reducer,

and a CFW attached to a SBIG ST-8XE CCD. This configuration provides a “plate

scale” of 0.67 ”arc per pixel (without binning). Since my “atmospheric seeing”

FWHM is usually 2.5 to 3.5 ”arc for typical exposure times (30 to 60 seconds)

there are ~ 4 to 5 pixels per FWHM, which is above the 3 pixel per FWHM requirement

for precision photometry. The FOV for this configuration is 17 x 11 ’arc.
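The plate-scale arithmetic in this paragraph can be checked with a few lines of Python. The sketch uses the standard relation plate scale = 206265 × pixel size / focal length. Two values are my assumptions rather than figures from the text: the ST-8XE's 9 µm pixels and its 1530 × 1020 array, so check your own camera's specifications. The ~2770 mm effective focal length is simply what those pixels imply for 0.67 ”arc per pixel.

```python
ARCSEC_PER_RADIAN = 206265.0


def plate_scale_arcsec(pixel_size_um: float, focal_length_mm: float) -> float:
    """Sky angle per pixel: 206265 * pixel size / focal length (same units)."""
    return ARCSEC_PER_RADIAN * (pixel_size_um / 1000.0) / focal_length_mm


def pixels_per_fwhm(seeing_fwhm_arcsec: float, scale_arcsec: float) -> float:
    """How many pixels span the seeing disk's full width at half maximum."""
    return seeing_fwhm_arcsec / scale_arcsec


def field_of_view_arcmin(n_x: int, n_y: int, scale_arcsec: float):
    """Field of view (arcmin) for an n_x by n_y pixel array."""
    return n_x * scale_arcsec / 60.0, n_y * scale_arcsec / 60.0


if __name__ == "__main__":
    scale = plate_scale_arcsec(9.0, 2770.0)   # assumed 9 um pixels, ~2770 mm focal length
    print(f"plate scale {scale:.2f} arcsec/pixel")
    for seeing in (2.5, 3.5):
        n = pixels_per_fwhm(seeing, 0.67)
        print(f"seeing {seeing} arcsec -> {n:.1f} pixels per FWHM (>= 3: {n >= 3})")
    fx, fy = field_of_view_arcmin(1530, 1020, 0.67)   # assumed ST-8XE array size
    print(f"FOV {fx:.0f} x {fy:.0f} arcmin")
```

With 2.5 to 3.5 ”arc seeing this gives about 3.7 to 5.2 pixels per FWHM. The assumed array size reproduces the quoted 17 x 11 ’arc field.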

The Meade

LX200GPS comes with a micro-focuser but I removed it in order to have sufficient

clearance of the optical backend with the mounting base to be able to observe

high declination targets. This configuration also allows me to reach the

north celestial pole which is needed for pointing alignment calibration.

Without the micro-focuser I need a way to make fine focus adjustments during

an observing session (even while continuing to observe a target). This has

been achieved by a wireless focuser (sold by Starizona) with the remote unit

physically attached to the mirror adjustment focusing knob and the local

unit connected to my computer.

Figure 2.02. My favorite configuration: AO-7, focal

reducer, CFW/CCD (SBIG ST-8XE). The telescope is a Meade LX200GPS 14-inch

aperture, f/10 (without a focal reducer).

I also have

a wireless weather station, with the sensors at the top of a 10-foot pole

located near the sliding roof observatory (shown in Fig. 5.01). The pole

is wood and the communications are wireless because lightning is common

during our summer “monsoon season” (July/August). The weather station is

a Davis Vantage Pro 2, supplemented by their Weather Link program for computer

downloads from a data logger. This program produces graphical displays

of all measured parameters: outside air temperature, dew point, barometric

pressure, rain accumulation, and wind maximum and average (for user-specified

intervals, which I’ve chosen to be 5 minutes). I find the graphs of wind

and temperature to be very useful during an observing session.

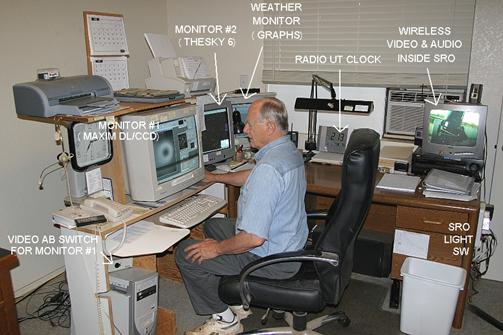

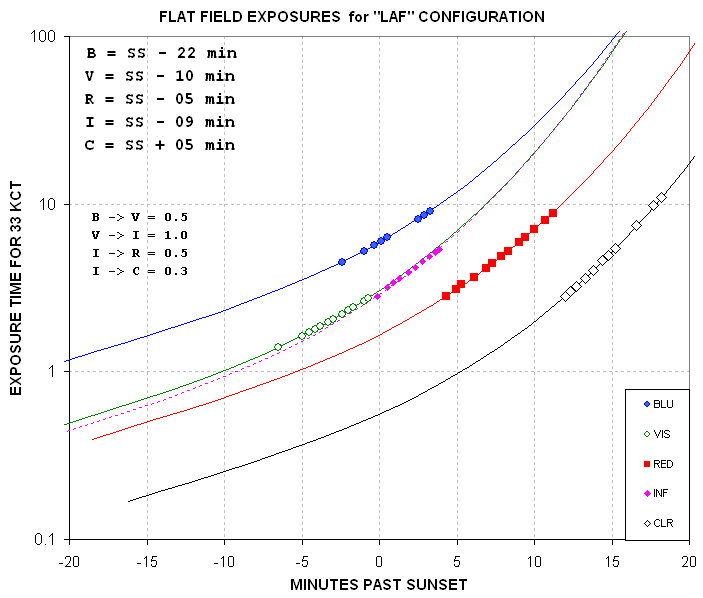

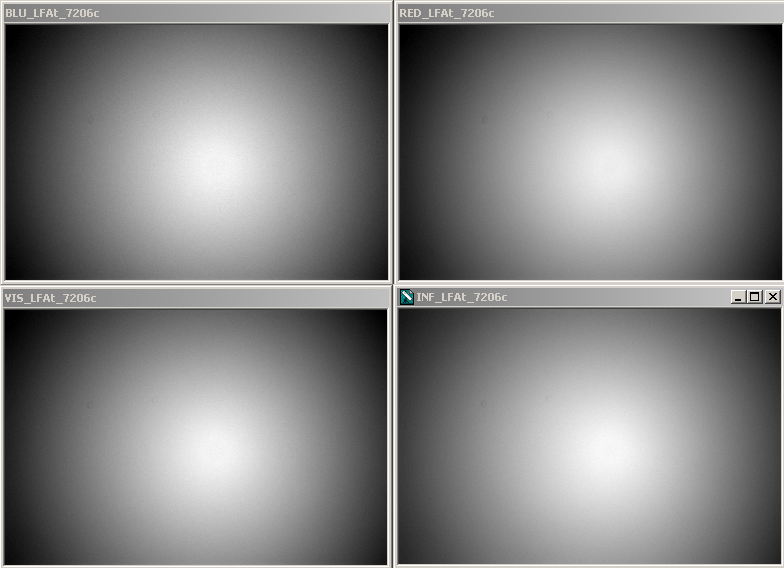

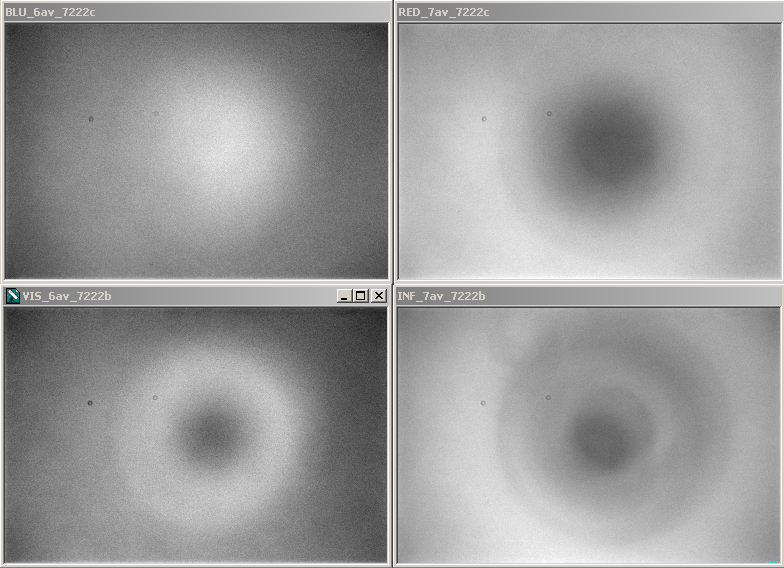

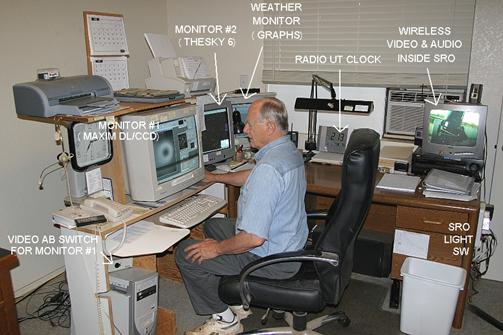

Figure 2.03. The

author is shown manning the control room at the beginning of an observing

session (making flat fields). Equipment is described in the text.

What used to be

a “master bedroom” is just the right size for everything needed in an observatory

control room. The main computer is connected to the telescope via 100-foot

underground cables in buried conduit. This computer has a video card supporting

two monitors, one for MaxIm DL and the other for TheSky/Six and other supporting

programs (labeled “Monitor #2” in the above figure).

Another computer

is dedicated to running the Davis Weather System program that downloads

readings from the data logger and displays them as graphs on its own monitor.

The Davis Weather System also has a real-time display panel; I find this

useful for quick readings of wind speed, wind direction, temperature and

dew point temperature when recording outside conditions in the observing

log.

A radio-controlled UT clock is synchronized with WWVB radio time signals every night. When accurate time-tagging of images is important I visually compare the radio-controlled clock with the main computer’s clock, which is synchronized using

internet queries by a program (AtomTimePro) at 3 hour intervals.

Above Monitor #1

is a flat bed scanner with a small blanket. This is where the cat sleeps,

and occasionally wakes, stretches, and reminds me about observing strategies.

On the desk (behind

my chair) is another monitor for display of a wireless video sensor in

the observatory. It shows a view of the telescope when a light is turned

on by a switch (right side of desk). It also has an audio signal that allows

me to hear the telescope drive motors, the sound of the wind as well as

barking coyotes. (My two dogs observe with me, on the floor, and they get

excited whenever coyote sounds come over the speaker.)

Below the wireless

video display monitor is something found in practically every observatory:

a “hi fi” for observing music. Since my area is remote, with no FM radio

signals, I have a satellite radio (Sirius) receiver with an antenna on the

roof and channel selector next to the wireless monitor.

Figure 2.04. Another

view of control room.

Sometimes I have

to take flat frames while a favorite program is on TV (e.g., “60 Minutes”

seems to be the usual one), so I have a second TV on a desk to my left

(Fig. 2.04). The remote control for it sits on a headphone switch box (next

to the phone). It displays a satellite TV signal that comes from a receiver

in the living room.

At the left end

of the table in Fig. 2.04 is a secondary computer used to display IR satellite

image loops that show when clouds are present. It also offloads computing

tasks from the main computer (such as e-mail notices of GRB detections)

to minimize the main computer’s competition for resources. This ensures that the AO-7 tip/tilt image stabilizer is running as fast as possible.

The secondary computer has a LAN connection with the primary computer, which

allows downloading images from the main computer for off-line image analysis

without interfering with the main computer’s resources.

On top of the main

computer (below table, to left) is an AB switch for sending the main monitor’s

video signal to another monitor in my living room. This allows me to “keep

track of tracking” from my living room chair, while reading or watching

TV. The remote monitor in the living room is on a swivel that allows me

to keep track of it from my outdoor patio chair. Comfort is important when

a lot of hours are spent with this all-consuming hobby.

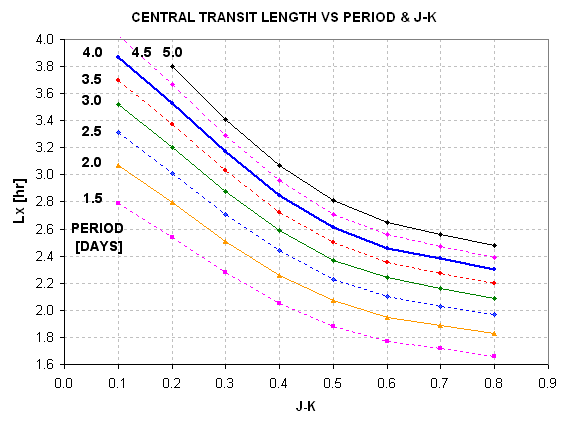

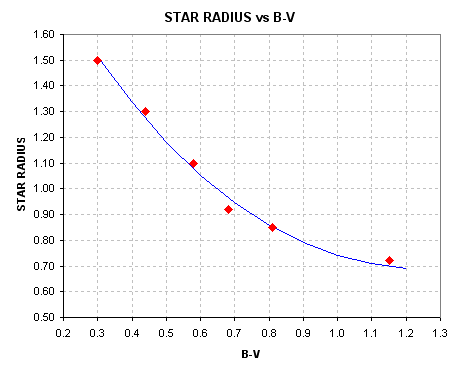

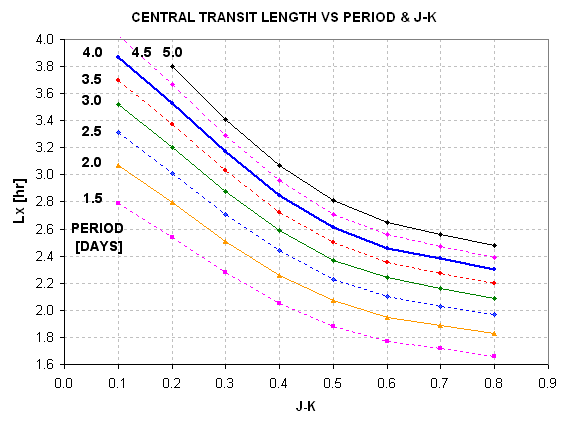

Charts are taped

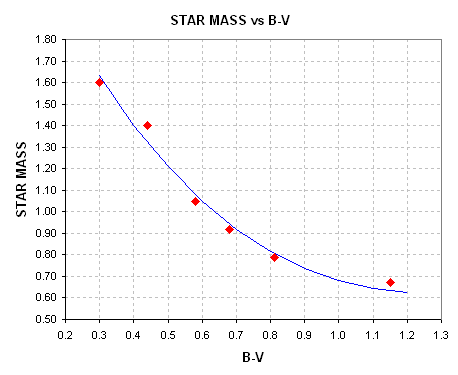

to every useful area. On one printer is a graph for converting J-K to B-V

star colors. On the side of the main monitor is a list of currently interesting

exoplanet candidates, with current information from other XO Project observers.

Charts are readily visible for estimating limiting magnitude, simplified

magnitude equation constants, and a quick way to predict maximum transit

length from an exoplanet’s star color and period (same as Fig. B.01). Post-its

are used to remind me of handy magnitude equations, site coordinates, local

to UT time conversion and nominal zenith extinction values.

─────────────────────────────────

Chapter 3

Exoplanet Choices

─────────────────────────────────

Exoplanets can

be thought of as belonging to three categories:

1) bright transiting exoplanets, BTEs (15 known, as of July, 2007)

2) faint transiting exoplanets, FTEs (8 known, as of July, 2007)

3) exoplanets not known to undergo transits, NTEs (225 known)

Those in the first

category are by far the most important. This is because transits of “bright

transiting exoplanets” (BTEs) allow investigations to be made of the exoplanet’s

atmospheric composition and temperature. Atmospheric composition is investigated

using large, professional telescopes with sensitive spectrographs. Atmospheric

temperature is inferred from thermal infrared brightness changes as the

exoplanet is occulted by the star. These investigations can only be done

with bright (nearby) exoplanets. In addition to permitting atmospheric studies,

the BTEs permit a determination to be made of their size. Since the exoplanet’s

mass is known from radial velocity measurements (with professional telescopes)

the planet’s average density can be derived. The size and average density

allow theoreticians to construct models for the planet’s density versus

radius, which lead to speculations about the presence of a rocky core.

All of these measurements and models can be used to speculate on the formation

and evolution of other solar systems. This, in turn, can influence speculation

on the question of “life in the universe.” The rate of discovery of BTEs, shown in Fig. 3.01, is growing exponentially. Therefore, projects for

BTEs that are described in this chapter can be done on a fast-growing list

of objects.
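Once mass and radius are in hand, the density step is simple arithmetic. The following is a minimal sketch that uses Jupiter's mass (1.898e27 kg) and equatorial radius (71,492 km) as units. Radial velocities give M sin i. This equals the true mass only because a transiting planet's orbit is nearly edge-on, so sin i ≈ 1.

```python
import math

M_JUP_KG = 1.898e27   # Jupiter mass
R_JUP_M = 7.1492e7    # Jupiter equatorial radius


def mean_density_g_cm3(mass_mjup: float, radius_rjup: float) -> float:
    """Average density from mass (Jupiter masses) and radius (Jupiter radii)."""
    mass_kg = mass_mjup * M_JUP_KG
    radius_m = radius_rjup * R_JUP_M
    volume_m3 = 4.0 / 3.0 * math.pi * radius_m ** 3
    return mass_kg / volume_m3 / 1000.0   # kg/m^3 -> g/cm^3


if __name__ == "__main__":
    # Hypothetical planets for illustration, not measured values.
    for mass, radius in ((1.0, 1.0), (1.0, 1.3), (0.5, 1.2)):
        print(f"M = {mass} MJ, R = {radius} RJ -> "
              f"{mean_density_g_cm3(mass, radius):.2f} g/cm^3")
```

Density goes as R⁻³, so a 30% larger radius at fixed mass cuts the density by more than half. This is why a precise transit depth, and hence a precise radius, matters to the models.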

The “faint transiting

exoplanets” (FTEs) can’t be studied for atmospheric composition and temperature,

but they do allow for the determination of exoplanet size and density since

transit depth can be measured. Most FTEs are near the galactic plane, toward the galactic center, and this makes them especially difficult to observe with amateur

telescopes. Although hardware capability improves with time, for both amateurs

and professionals, I have adopted the somewhat arbitrary definition of

V-mag = 13 for the FTE/BTE boundary. At the present time most amateurs

are incapable of measuring transit properties when V-mag > 13.

The many “non-transiting

exoplanets” (NTEs) should really be described as not being known to exhibit

transits. Of the 225 on the list a statistical argument can be made that

probably 10 to 15 of them actually are transiting but observations of them

are too sparse to have seen the transits. As more amateurs observe NTEs

the BTEs among them will hopefully be identified. This is what happened

to GJ 436, which languished on the TransitSearch.org web site list for years

before it was observed at the right time and found to undergo 6 milli-magnitude

deep transits by a team of amateur observers (Gillon et al., 2007). This underscores

the potential value of NTEs for the amateur observer.
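One standard way to frame such a statistical argument uses the geometric transit probability. This is not necessarily the calculation behind the 10 to 15 estimate. For a circular orbit of radius a around a star of radius R*, a randomly oriented orbit transits with a probability of roughly R*/a. Summing that probability over a list of planets gives the expected number of transiters. The sketch assumes circular orbits and Sun-like hosts, and the periods in the example are hypothetical.

```python
R_SUN_AU = 6.957e8 / 1.495978707e11   # solar radius expressed in AU


def semi_major_axis_au(period_days: float, star_mass_msun: float = 1.0) -> float:
    """Kepler's third law in solar units: a^3 = M * P^2 (a in AU, P in years)."""
    period_years = period_days / 365.25
    return (star_mass_msun * period_years ** 2) ** (1.0 / 3.0)


def transit_probability(period_days: float, star_mass_msun: float = 1.0,
                        star_radius_rsun: float = 1.0) -> float:
    """Geometric transit probability ~ R_star / a for a circular orbit."""
    a_au = semi_major_axis_au(period_days, star_mass_msun)
    return star_radius_rsun * R_SUN_AU / a_au


def expected_transiters(periods_days) -> float:
    """Expected number of transiting planets in a list (Sun-like hosts assumed)."""
    return sum(transit_probability(p) for p in periods_days)


if __name__ == "__main__":
    print(f"4-day orbit: {transit_probability(4.0):.1%} chance of transiting")
    hypothetical_periods = [3.0, 4.0, 10.0, 100.0, 365.0]
    print(f"expected transiters: {expected_transiters(hypothetical_periods):.2f}")
```

A 4-day hot Jupiter has nearly a 10% chance of transiting, while a planet in a one-year orbit has under 0.5%. Most of the expected transiters on an NTE list are therefore the short-period ones.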

For those NTEs that truly do not transit, which is probably 95% of them, the inclination of the exoplanet’s orbit is unknown, so radial velocity measurements provide only a lower limit on its mass. Since transits have not been observed the exoplanet’s size is unknown, which means nothing is known about the planet’s density. Atmospheric composition and temperature can’t be determined since transits don’t occur.

Some NTEs may eventually be discovered to undergo transit, and will switch

categories.

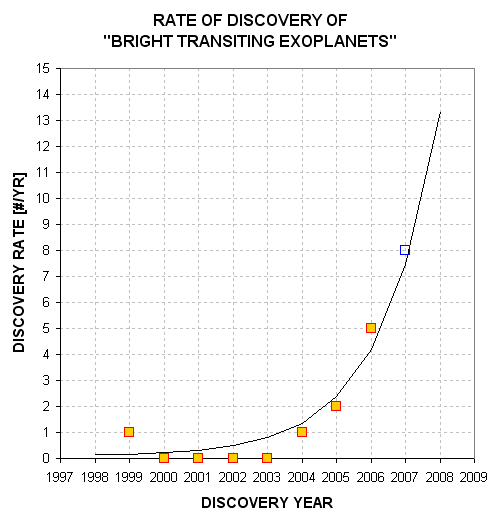

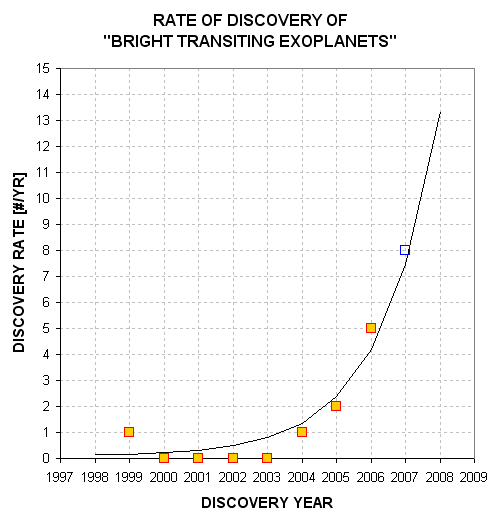

Figure 3.01. Rate

of discovery of BTEs. The curve is an exponential fit with a doubling time

of ~1.2 years. The open blue square symbol for 2007 is plotted at 8 because 4 BTEs were announced during the first 6 months of the year.

Observing Project Types

All categories

of exoplanets are worth considering for a night’s observing session. It’s

understandable that the beginning observer will want to start by observing

a few “easy” transits of BTEs. Once the excitement of this has worn off,

however, there may be an interest in other observing projects related to

exoplanet transits.

One of my favorite

projects is to monitor known BTEs “out-of-transit” (OOT).

If no other exoplanets are present in the BTE’s solar system then the observed

light curve will be a very uninteresting plot with constant brightness

for the entire observing session. However, if another exoplanet exists

in the BTE’s solar system its orbit is likely to be in the same plane as

the known BTE, and it may produce its own transits on a different schedule

from the BTE. Since the known BTE was discovered in wide field survey camera data, its transits are likely to be the easiest ones in that system to detect. Therefore, an observer searching for a second exoplanet in a BTE solar system should be prepared for a harder-to-detect transit. The second exoplanet’s transit depth will probably be much shallower,

and it could either last longer or be shorter, and it will come at times

that differ from the BTE transit.

Before selecting

an exoplanet to observe extensively in the OOT mode, check its “impact parameter.” This is the distance between the transit chord and the star’s center, at closest approach, divided by the star’s radius. If the impact parameter is close to one the transit is nearly grazing, which means that any outer planets in that system (assuming coplanar orbits) would not transit. An impact parameter of zero corresponds to a transit that goes through the star’s center; this means that all other planets in the system are likely to transit. As you may have guessed, BTEs typically have impact parameter values of ~0.4. Since the impact parameter of a coplanar orbit grows in proportion to orbit size, exoplanets in orbits twice the size of the known exoplanet (impact parameter ~0.8) are likely to produce transits. Given that

a planetary system exhibits orbital periods that are proportional to orbital

radius raised to the 1.5 power, a second exoplanet in an orbit that is twice

the size of a hot Jupiter will have a period of 2.8 times that of the hot

Jupiter.
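The geometry can be made explicit. For a circular orbit the impact parameter is b = a cos(i)/R*. If a second planet shares the known planet's orbital inclination, its impact parameter scales in direct proportion to orbit size, and its period follows from Kepler's third law. The following is a minimal sketch. It assumes coplanar circular orbits and counts a transit as possible when b < 1, ignoring the planet's own radius.

```python
def outer_impact_parameter(b_inner: float, a_ratio: float) -> float:
    """b = a*cos(i)/R_star, so for the same inclination b grows linearly with a."""
    return b_inner * a_ratio


def period_ratio(a_ratio: float) -> float:
    """Kepler's third law: period is proportional to a**1.5."""
    return a_ratio ** 1.5


def max_transiting_a_ratio(b_inner: float) -> float:
    """Largest orbit-size ratio for which the outer planet still has b < 1."""
    return 1.0 / b_inner


if __name__ == "__main__":
    b = 0.4   # typical BTE impact parameter quoted in the text
    for a_ratio in (1.5, 2.0, 2.5, 3.0):
        b_outer = outer_impact_parameter(b, a_ratio)
        print(f"a x {a_ratio}: b = {b_outer:.2f}, period x {period_ratio(a_ratio):.2f}, "
              f"transits: {b_outer < 1.0}")
    print(f"limit: a x {max_transiting_a_ratio(b):.1f}")
```

With b = 0.4, a planet in an orbit twice as large has b = 0.8 and a period 2.8 times longer. Under these assumptions, transits remain possible out to about 2.5 times the orbit size, or about 4 times the period.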

There’s a variant

of the OOT observing project type, which could be called “looking

for Trojans.” This project is based on the presence of Trojan asteroids

in our solar system. Jupiter is accompanied by swarms of asteroids in approximately

the same orbit as Jupiter but preceding and following by 60 degrees of

orbital position. These locations are gravitationally stable and are called

Lagrangian points, L4 and L5. There are about 1100 known Trojans and none of them is large (none exceeds 370 km). If they were lumped together in one

object it would have a diameter ~1% that of Jupiter. In solar systems with

a Jupiter-sized planet orbiting close to its star, the so-called “hot Jupiter”

that most BTEs resemble, the BTE would have to be accompanied by a much larger

Trojan companion to produce observable transits. These larger Trojan companions

cannot be ruled-out by present theories for solar system formation and evolution,

so they are worth an amateur’s attention as a special project. The search

strategy is straightforward: since 60 degrees is 1/6 of an orbit, simply observe at times that are 1/6 of a BTE period before and after the BTE’s scheduled transit. In this chapter I’ll

show you how to create your own schedule for Trojan transit times.

Another exoplanet

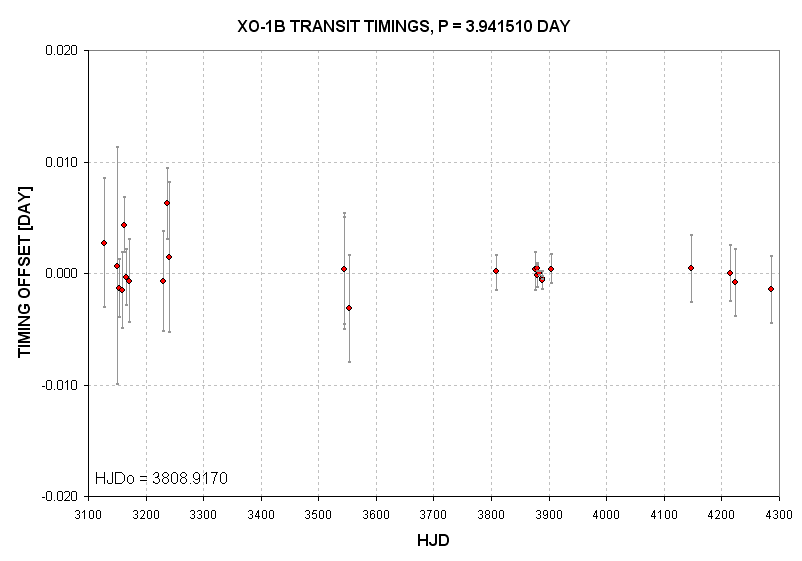

project type could be called “mid-transit timings.” The

goal is to detect anomalies in mid-transit times caused by the gravitational

influence of another planet in a resonant orbit, as described in more detail

in Chapter 16. Although this is something one person could do alone it is

more appropriate to combine mid-transit timings by many observers in a search

for anomalies. The magnitude of the anomalies can be as much as 2 or 3 minutes

and the time scale for sign reversals is on the order of a year. Only BTE

objects are suitable for this project.
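The bookkeeping behind a timing search is an “observed minus calculated” (O−C) residual. Each measured mid-transit time is compared with the prediction of a linear ephemeris T = T0 + E × P, where E is the integer epoch. The following is a minimal sketch, and the ephemeris and “observed” times are invented for illustration.

```python
MINUTES_PER_DAY = 1440.0


def o_minus_c(observed_mid: float, t0: float, period: float):
    """Return (epoch, O-C in minutes) for a linear ephemeris T = T0 + E*P."""
    epoch = round((observed_mid - t0) / period)
    calculated = t0 + epoch * period
    return epoch, (observed_mid - calculated) * MINUTES_PER_DAY


if __name__ == "__main__":
    t0, period = 2454000.0000, 3.5000          # hypothetical ephemeris (days)
    observed = [2454035.0014, 2454070.0000,    # hypothetical mid-transit times
                2454104.9983]
    for t in observed:
        epoch, oc = o_minus_c(t, t0, period)
        print(f"epoch {epoch:4d}: O-C = {oc:+.1f} min")
```

Anomalies of the kind described above would appear in a plot of O−C against epoch built from many observers' timings.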

A somewhat more

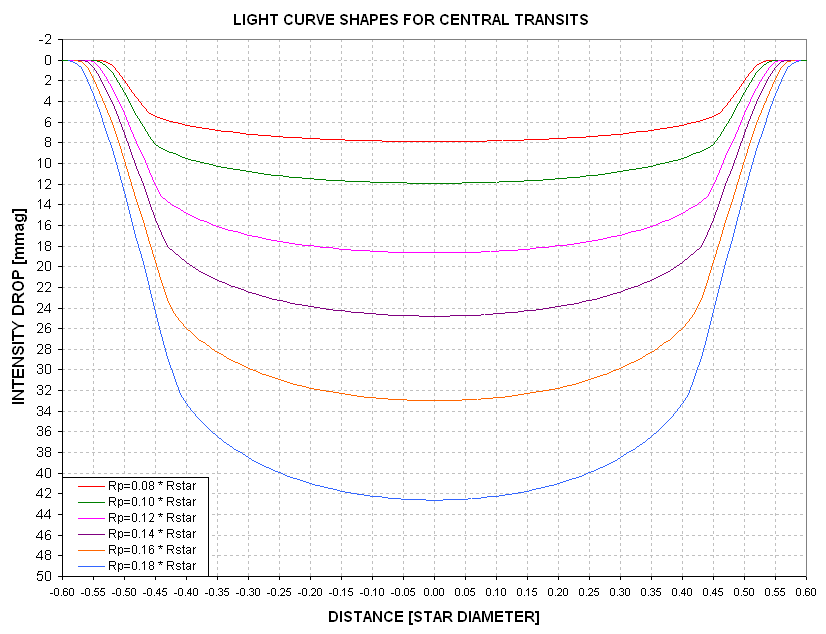

challenging observing project is to refine “transit depth versus

wavelength.” Again, this can only be done with BTEs. As the name implies,

it consists of observing a BTE at known transit times with different filters

for each event. If you have a large aperture (20 inches or larger) you could

alternate between two filters throughout an event. The goal is to further

refine the solution for the planet’s path across the star and simultaneously

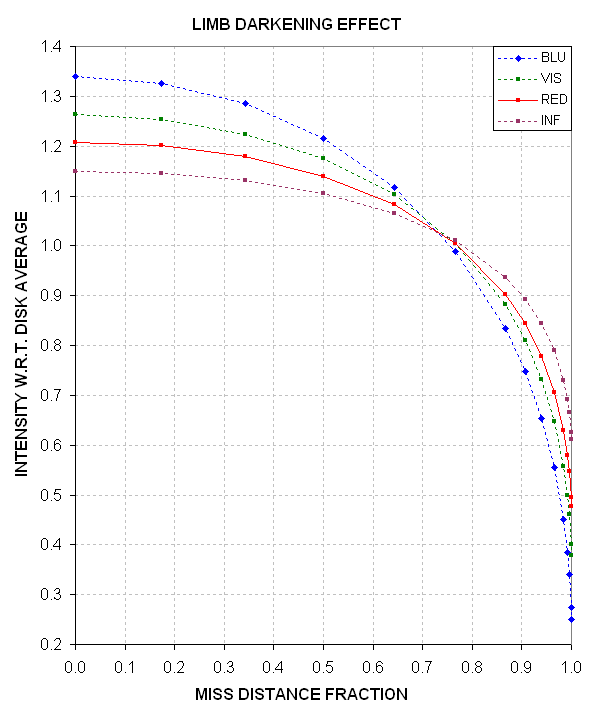

refine the star’s limb darkening function. As explained later, an exoplanet

whose path passes through star center will have a deeper depth at shorter

wavelengths whereas if the path is a chord that crosses farther than about

73% of the way to the edge at closest approach the opposite depth versus

color relationship will be found. Constraining the path’s geometry and star

limb darkening will lead to an improved estimate for planet size and this

is useful for theoreticians studying planetary system formation and evolution.
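The crossover can be illustrated with the simplest limb-darkening model, the linear law I(μ) = 1 − u(1 − μ). Here μ = √(1 − r²), and r is the planet's distance from star center in units of the star radius. For a small planet the depth is roughly (Rp/R*)² times I(μ) divided by the disk-averaged intensity 1 − u/3. The two are equal where μ = 2/3, i.e. r = √5/3 ≈ 0.75, for any u. This is close to the ~73% quoted above; other limb-darkening laws shift it slightly. The u values below are hypothetical, with the larger one standing in for a shorter wavelength, where limb darkening is stronger.

```python
import math


def depth_factor(r: float, u: float) -> float:
    """Local / disk-averaged intensity for the linear limb-darkening law.

    r is the projected distance from star center in stellar radii (0 <= r <= 1).
    Transit depth ~ (Rp/R*)^2 * depth_factor for a small planet.
    """
    mu = math.sqrt(1.0 - r * r)
    local = 1.0 - u * (1.0 - mu)
    disk_average = 1.0 - u / 3.0
    return local / disk_average


CROSSOVER_R = math.sqrt(5.0) / 3.0   # where local intensity equals the average


if __name__ == "__main__":
    u_blue, u_red = 0.8, 0.5   # hypothetical coefficients
    for r in (0.0, 0.5, CROSSOVER_R, 0.9):
        print(f"r = {r:.3f}: blue {depth_factor(r, u_blue):.3f}, "
              f"red {depth_factor(r, u_red):.3f}")
```

Near star center the bluer (larger u) factor is larger, so the transit is deeper at short wavelengths. Beyond r ≈ 0.75 the order reverses.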

Every amateur should

consider observing nominally NTE exoplanets at times they’re

predicted to have possible transits in order to determine whether or not

they really are an NTE instead of a BTE that is “waiting” to be discovered.

As stated above, GJ 436 is one example of an exoplanet that was nominally

identified as an NTE which in fact was discovered to exhibit transits by

an amateur group that changed it to a BTE. The nominally NTE list can be

found at TransitSearch.org, which is maintained by Greg Laughlin. Times favorable

for transits, if they occur, are given on this web site, as well as likely

transit depth.

Finally, some exoplanet

observers who exhibit advanced observing skills will be invited to join

a group of amateurs supporting professionals conducting wide field camera

surveys that are designed to find exoplanet transits. So far only the XO

Project makes use of amateurs this way, in a systematic way, but other wide

field survey groups may recruit a similar team of advanced amateurs for follow-up

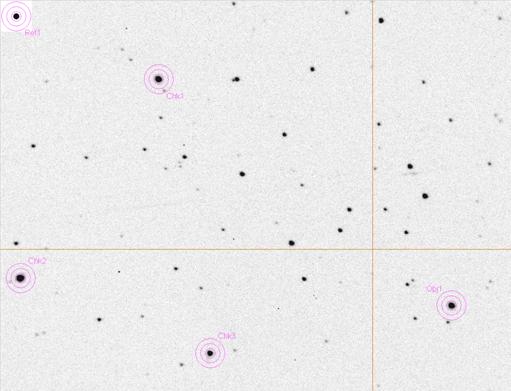

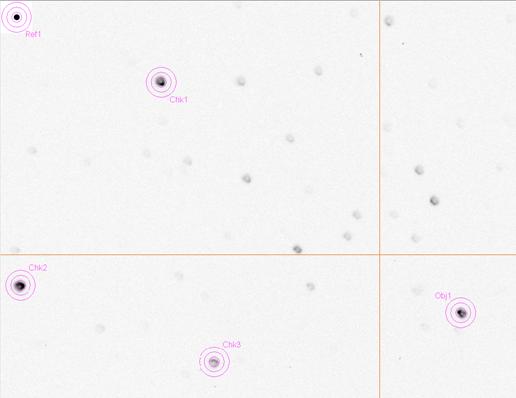

observations. The main task of these observers is to observe a star field

on a list of interesting candidates, at specific times, to identify which

star is varying at the times when the survey cameras detect small fades

from a group of stars in the camera’s low-resolution photometry aperture.

If a star is found that varies less than ~30 mmag it may be an exoplanet,

and additional observations would then be required. If the amateur light

curves are compatible with the exoplanet hypothesis a professional telescope

will be used to measure radial velocity on a few dates for the purpose of

measuring the mass of the object orbiting in front of the bright star. A

low mass for the secondary almost assures that it is an exoplanet, although

careful additional observations and model fitting will be done by the professionals

to confirm this. If you’re on the team of amateur observers contributing

to follow-up observations that lead to an exoplanet discovery, you will be

smiling for days with a secret that can’t be shared until the official announcement

is made. Appendix B is included for amateurs on a team charged with wide

field camera follow-up observations.
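The ~30 mmag threshold can be connected to planet size. Ignoring limb darkening and light from neighboring stars, the fractional depth of a transit is about (Rp/R*)². The following is a minimal sketch that converts a fade in milli-magnitudes to a radius estimate, assuming a Sun-sized host. It is my illustration of the reasoning, not a criterion used by any survey team.

```python
import math

R_SUN_IN_R_JUP = 6.957e8 / 7.1492e7   # about 9.73 Jupiter radii per solar radius


def depth_fraction(depth_mmag: float) -> float:
    """Fractional flux loss for a fade of depth_mmag milli-magnitudes."""
    return 1.0 - 10.0 ** (-0.4 * depth_mmag / 1000.0)


def companion_radius_rjup(depth_mmag: float, star_radius_rsun: float = 1.0) -> float:
    """Depth ~ (Rp/R*)^2, so Rp ~ R* * sqrt(depth); result in Jupiter radii."""
    return math.sqrt(depth_fraction(depth_mmag)) * star_radius_rsun * R_SUN_IN_R_JUP


if __name__ == "__main__":
    for mmag in (10, 20, 30, 60):
        print(f"{mmag:2d} mmag: depth {depth_fraction(mmag):.3f}, "
              f"Rp ~ {companion_radius_rjup(mmag):.2f} RJ for a Sun-sized star")
```

A 30 mmag fade corresponds to about 1.6 Jupiter radii around a Sun-sized star. Deeper fades generally point to a companion too large to be a planet, unless the host star is smaller than the Sun.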

Whenever the night

sky promises to be clear and calm the amateur observer will have many observing

choices. I suspect that amateur exoplanet observers will eventually form

specialty groups, with some specializing in each of the following possible

areas:

OOT searches for new exoplanets

Trojan transit searches

BTE timing anomalies produced by another exoplanet in resonant orbit

Transit depth versus filter band

Search for transits by nominal NTEs

Wide field camera candidate follow-up

Calculating Ephemerides for BTEs

Many of the exoplanet

observing projects listed above involve the BTEs. This section describes

how to calculate when their transits occur.

The following list

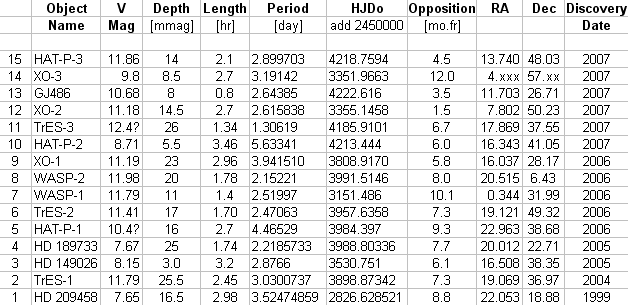

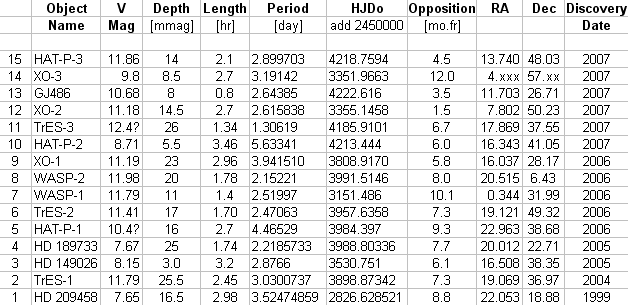

of known transiting exoplanet systems (brighter than 13th magnitude)

is complete as of mid-2007. It is presented as an example of the kind of

list that each transit observer will want to maintain, until such time as

it is maintained by an organization dedicated to serving the amateur exoplanet

observer (cf. Chapter 17’s description of my idea for an Exoplanet Transit

Archive). At the present time http://exoplanet.eu/catalog-transit.php is

an excellent web site listing transiting exoplanets (maintained by Jean Schneider).

Since it does not list transit depth, transit length, object coordinates

or other information useful for planning an observing session I maintain

a spreadsheet of transiting exoplanets brighter than 13th magnitude

(BTE_list.xls). Go to http://brucegary.net/book_EOA/xls.htm for a free download

of it. Here’s a screen capture of part of it.

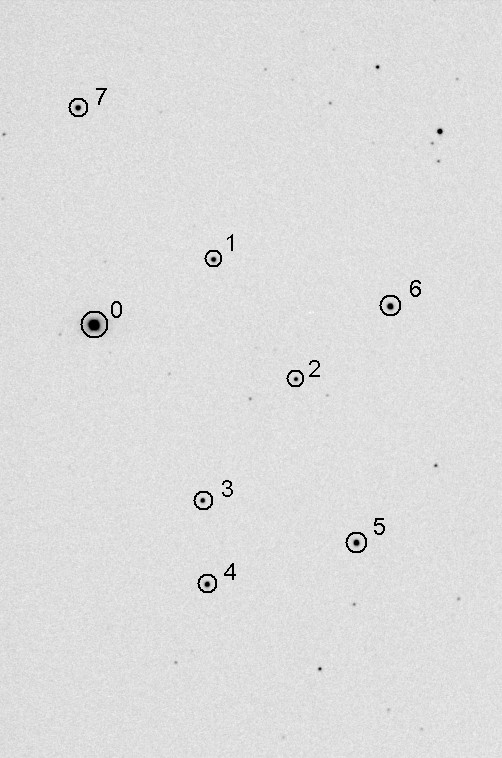

Figure 3.02. List

of bright transiting exoplanets (V-mag < 13). The “Opposition” date

is the time of year when the object crosses the meridian at local midnight.

One thing to notice

about this table is that all 15 BTEs are in the northern celestial hemisphere.

This is due to a selection effect since all wide field search cameras are

in the northern hemisphere. If there had always been as many cameras in

the southern hemisphere it is fair to expect that we would now have a list

of ~30 BTEs. Based on the explosive growth rate shown in Fig. 3.01 the list

of BTEs could be in the hundreds in a few years.
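To see what “explosive” means numerically, here is a sketch that simply extrapolates the Fig. 3.01 fit. It assumes an annual discovery rate of about 8 in 2007 that doubles every 1.2 years, added to the 15 BTEs known in mid-2007. The second half of 2007 is assumed to match the first. It illustrates the fit and is not a forecast.

```python
def annual_rate(year: int, rate_2007: float = 8.0, doubling_years: float = 1.2) -> float:
    """Discoveries per year if the exponential fit continues."""
    return rate_2007 * 2.0 ** ((year - 2007) / doubling_years)


def projected_total(end_year: int, known_mid_2007: int = 15,
                    rate_2007: float = 8.0, doubling_years: float = 1.2) -> float:
    """Cumulative BTE count at the end of end_year under the same assumption."""
    total = known_mid_2007 + rate_2007 / 2.0   # second half of 2007
    for year in range(2008, end_year + 1):
        total += annual_rate(year, rate_2007, doubling_years)
    return total


if __name__ == "__main__":
    for year in range(2007, 2012):
        print(f"end of {year}: ~{projected_total(year):.0f} BTEs")
```

Under these assumptions the list passes 100 by the end of 2010.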

Another thing to

notice about this table is that 9 of the 15 BTEs are best observed in the

summer, June through September. Maybe more BTEs have been discovered in

the summer sky because that’s when there are more stars in the night sky

(that’s when the Milky Way transits at midnight). It is unfortunate

that the northern hemisphere summer is also the time when nights are shortest,

and is therefore the least favorable time for observing a complete transit.

(Ironically, for my location in Southern Arizona the monsoon season

is from July to September, and most of these nights are overcast with a

residual of the afternoon’s thunderstorms.)

What’s the table

in Fig. 3.02 good for when planning an observing session for an upcoming

clear night? You may use this table by first noting which objects are “in

season.” The season begins approximately 3 months before “opposition” and ends 3 months afterwards. On those dates the object crosses the meridian at about 6 AM and 6 PM, respectively.

An object may be observed “outside” the season, but observing intervals

will be limited (the amount will depend on site latitude and object declination).
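The 6 AM and 6 PM figures follow from the sidereal drift. A given star crosses the meridian about 3.94 minutes earlier each day, or about 2 hours earlier per month. The following is a minimal sketch. It assumes the object crosses at local midnight on its opposition date and ignores time-zone offsets, daylight saving time and the equation of time.

```python
SOLAR_DAYS_PER_YEAR = 365.2422


def meridian_crossing_hour(days_after_opposition: float) -> float:
    """Approximate local mean time (0-24 h) when the object crosses the meridian.

    Negative day counts are dates before opposition.
    """
    drift_hours = days_after_opposition * 24.0 / SOLAR_DAYS_PER_YEAR
    return (-drift_hours) % 24.0


if __name__ == "__main__":
    for days in (-91, -30, 0, 30, 91):
        hour = meridian_crossing_hour(days)
        print(f"{days:+4d} days from opposition: crosses meridian near {hour:4.1f} h")
```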

You’ll want to

calculate when transits can be observed. This can be done using a spreadsheet

available at: http://brucegary.net/book_EOA/xls.htm. It has input areas for

the object’s HJDo, period, transit length, RA and Dec. Another input area

is for the observing site’s longitude and latitude. A range of rows with

user specified N values (number of periods since HJDo) is used to calculate

specific JD values for transits. The JD values are converted to date format for convenience (add 34981.5 to JD − 2450000 to obtain an Excel date serial number, then specify your favorite date format). The spreadsheet includes an approximate conversion

of HJD to JD (accurate to ~1/2 minute). Columns show UT times for ingress,

mid-transit and egress when the object is at an elevation higher than a

user-specified value, such as 20 degrees. One page is devoted to each of

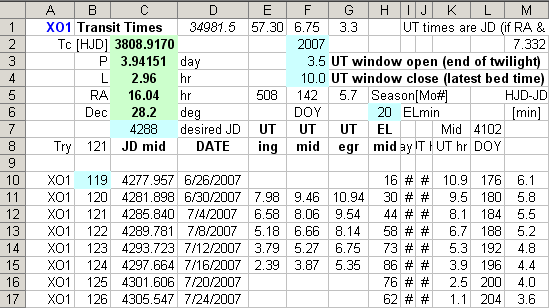

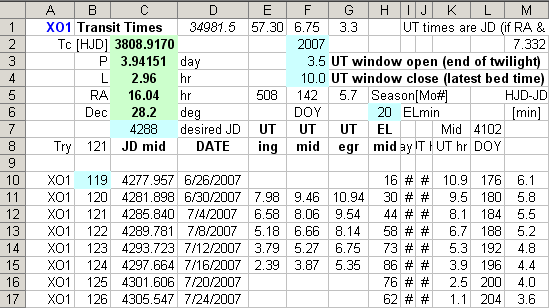

the 15 known BTEs. The following figure shows part of the display for the

XO-1 page.

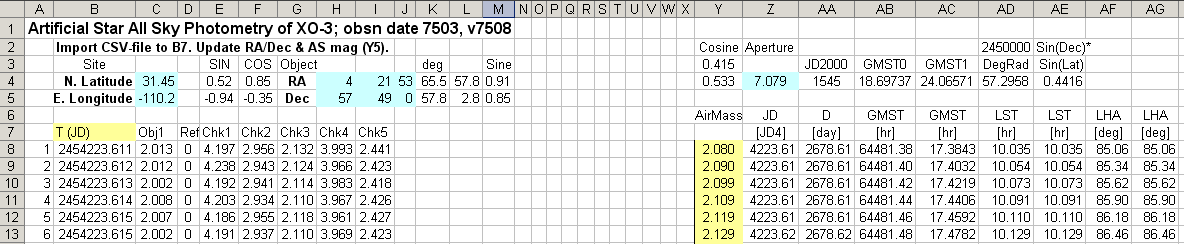

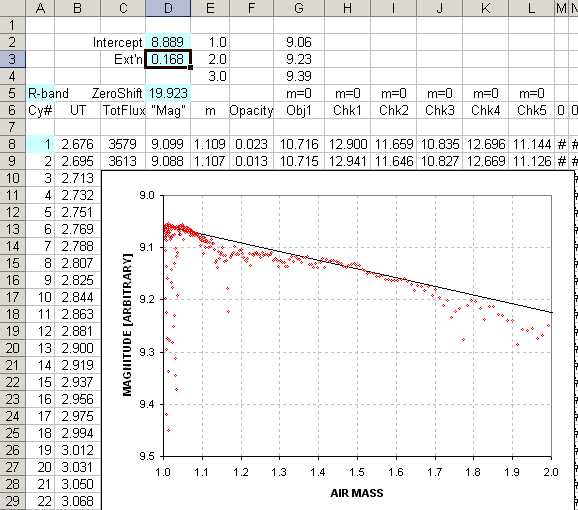

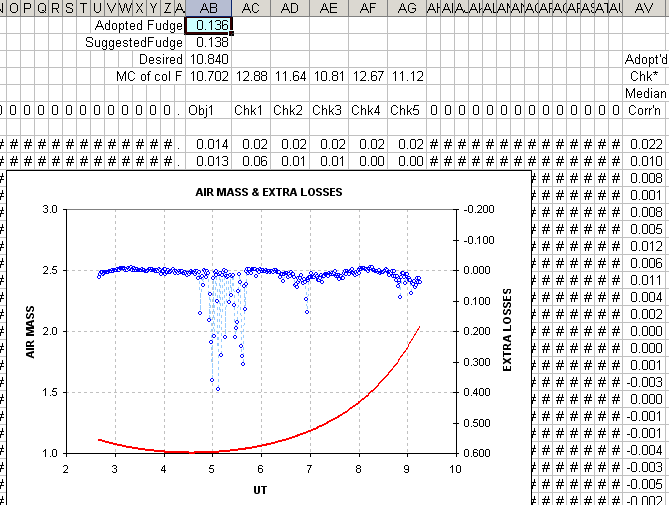

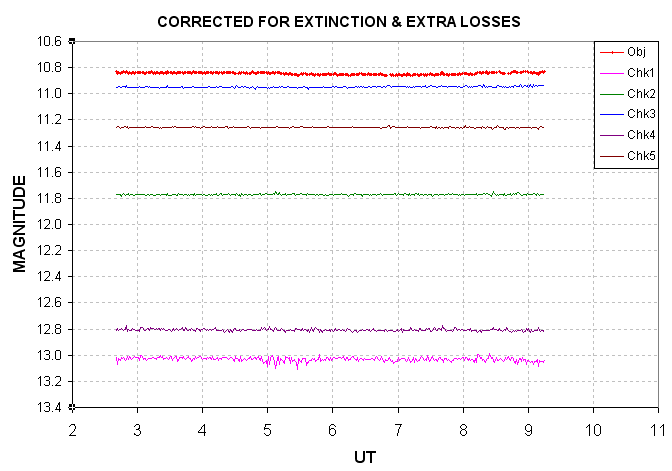

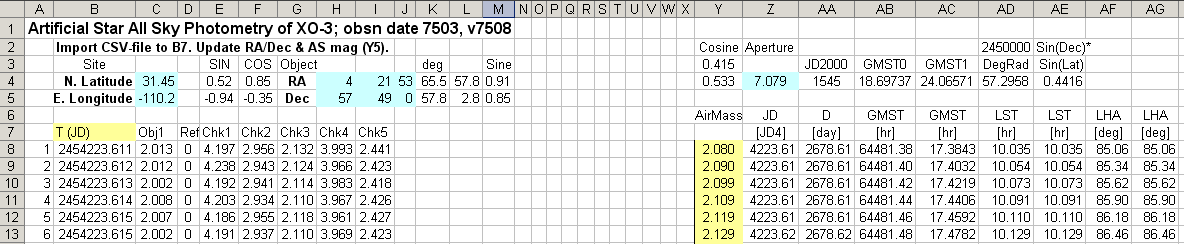

In this figure

cells C2:C6 contain BTE-specific information, such as HJDo, period, length

of transit and RA/Dec coordinates. Site coordinates are at F2:F3. The user

enters the year at G2 and the UT range that you’re willing to observe in

cells G3:G4. Cell H6 is a minimum elevation angle used as a criterion for

display of columns E-G. A 4-digit version of the current JD is entered in cell C7;

this is used to suggest to the user a number of periods (elapsed since HJDo)

to enter in cell B10. Cells below B10 are integer periods since HJDo that

lead to column C’s HJD transit times. Column D converts these values to UT

date. Columns H through AB (not shown) are used to calculate elevation angle

at the observer’s site (column H). Pages similar in format to this one are

present for the other BTEs, so by simply flipping through the spreadsheet

pages it is possible to determine whether any of the BTEs are observable

on a given night. The user may screen capture each page and print them for

later transfer to a monthly observing calendar. As a convenience I mark my

calendar a month ahead for all observable BTE transits.
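The elevation test at the heart of the spreadsheet can also be written directly. This sketch is my own, not a transcription of the spreadsheet's columns. It uses Meeus's linear approximation for Greenwich mean sidereal time and the standard altitude formula sin(alt) = sin φ sin δ + cos φ cos δ cos H. It ignores precession and refraction, which is acceptable for a 20 degree cutoff. The site coordinates are approximate and illustrative, and the target is hypothetical.

```python
import math


def gmst_deg(jd_ut: float) -> float:
    """Greenwich mean sidereal time in degrees (Meeus, linear term only)."""
    return (280.46061837 + 360.98564736629 * (jd_ut - 2451545.0)) % 360.0


def elevation_deg(jd_ut: float, ra_deg: float, dec_deg: float,
                  lat_deg: float, lon_east_deg: float) -> float:
    """Geometric elevation of an object (no refraction) seen from the site."""
    lst_deg = (gmst_deg(jd_ut) + lon_east_deg) % 360.0
    hour_angle = math.radians(lst_deg - ra_deg)
    lat, dec = math.radians(lat_deg), math.radians(dec_deg)
    sin_alt = (math.sin(lat) * math.sin(dec)
               + math.cos(lat) * math.cos(dec) * math.cos(hour_angle))
    # Clamp to guard against tiny rounding errors just outside [-1, 1].
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_alt))))


if __name__ == "__main__":
    lat, lon = 31.5, -110.2          # illustrative southern Arizona site (west is negative)
    ra, dec = 240.0, 28.0            # hypothetical target, degrees
    mid_transit_jd = 2454250.80      # hypothetical mid-transit time (JD, UT)
    for hours in (-1.5, 0.0, 1.5):   # roughly ingress, mid-transit, egress
        jd = mid_transit_jd + hours / 24.0
        elev = elevation_deg(jd, ra, dec, lat, lon)
        print(f"{hours:+.1f} h: elevation {elev:5.1f} deg, usable: {elev > 20.0}")
```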

Trojan searches can be scheduled by creating two additional spreadsheets.

One of them will require subtracting 1/6 of a BTE’s period from HJDo and

the other will have 1/6 period added to HJDo.
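The same scheduling logic, including the Trojan offsets, can be sketched outside a spreadsheet. This minimal version assumes circular orbits, so the leading (L4) point transits 1/6 period early and the trailing (L5) point 1/6 period late. JD values are turned into calendar dates with the same +34981.5 offset used in the spreadsheet, which converts JD − 2450000 to an Excel date serial number. Unlike the spreadsheet, it treats HJD as JD, which can be off by up to about 8 minutes. The ephemeris values are hypothetical.

```python
from datetime import datetime, timedelta

# Serial number 0 of Excel's "1900" date system; correct for all dates after
# February 1900, which covers any exoplanet observation.
EXCEL_EPOCH = datetime(1899, 12, 30)


def transit_events(hjd0: float, period: float, length_days: float, n: int,
                   phase_offset: float = 0.0):
    """Return (ingress, mid-transit, egress) for the n-th period after HJDo.

    phase_offset shifts the event by a fraction of the period:
    -1/6 for the leading (L4) Trojan point, +1/6 for the trailing (L5) point.
    """
    mid = hjd0 + (n + phase_offset) * period
    half = length_days / 2.0
    return mid - half, mid, mid + half


def jd_to_utc(jd: float) -> datetime:
    """Full JD -> UT datetime via an Excel-style serial: (JD - 2450000) + 34981.5."""
    serial = (jd - 2450000.0) + 34981.5
    return EXCEL_EPOCH + timedelta(days=serial)


if __name__ == "__main__":
    # Hypothetical elements for illustration only; HJD is treated as JD here.
    hjd0, period, length = 2454000.1234, 3.5, 0.125
    for n in range(100, 103):
        for label, offset in (("transit", 0.0), ("L4 Trojan", -1 / 6), ("L5 Trojan", 1 / 6)):
            _, mid, _ = transit_events(hjd0, period, length, n, offset)
            print(f"N={n} {label:9s} mid {jd_to_utc(mid):%Y-%m-%d %H:%M} UT")
```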

A fuller description

of the use of this and other spreadsheets that support this book is available

at the web site http://brucegary.net/book_EOA/xls.htm.

Figure 3.03. Sample

Excel spreadsheet showing XO-1b transit events and their “visibility” (from

my site). Columns E, F and G show UT times for transits that are above

20 degree elevation and between 3.5 and 10.0 UT. Other details are explained

in the text.

SpectraShift

Before leaving

the topic of exoplanet projects that are within the reach of amateurs I

want to describe an amateur-led project, called SpectraShift, that is designed

to detect exoplanets spectroscopically. Radial velocity requirements are

demanding since a hot Jupiter orbiting a solar mass star will impart radial

velocity excursions of only ±200 m/s if it’s in a 4-day orbit. An

amateur group led by Tom Kaye is assembling a system that is expected to

achieve 100 m/s resolution using a 44-inch aperture telescope for the brighter

BTEs. This group used a 16-inch telescope in 2000 and 2004 to observe Tau

Boo and they are credited with being the first amateurs to detect an exoplanet

using spectroscopic measurements of radial velocity.
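The ±200 m/s figure can be checked against the standard formula for the star's reflex velocity semi-amplitude, K = (2πG/P)^(1/3) · Mp sin i / (M* + Mp)^(2/3) / √(1 − e²). The following is a minimal sketch with rounded constants.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30       # kg
M_JUP = 1.898e27       # kg


def rv_semi_amplitude(period_days: float, planet_mass_mjup: float,
                      star_mass_msun: float = 1.0, sin_i: float = 1.0,
                      eccentricity: float = 0.0) -> float:
    """Stellar reflex velocity semi-amplitude K in m/s."""
    period_s = period_days * 86400.0
    m_p = planet_mass_mjup * M_JUP
    m_s = star_mass_msun * M_SUN
    return ((2.0 * math.pi * G / period_s) ** (1.0 / 3.0)
            * m_p * sin_i / (m_s + m_p) ** (2.0 / 3.0)
            / math.sqrt(1.0 - eccentricity ** 2))


if __name__ == "__main__":
    for mass in (0.5, 1.0, 1.5):
        print(f"{mass} MJ, 4-day orbit: K = {rv_semi_amplitude(4.0, mass):.0f} m/s")
```

For a 1 Jupiter-mass planet in a 4-day orbit around a solar-mass star this gives roughly 130 m/s. K is nearly proportional to planet mass, so the ±200 m/s quoted above corresponds to a hot Jupiter of about 1.5 Jupiter masses.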

When a wide field survey camera directs an amateur team to candidates for follow-up light curve observations, and when the amateur light curves indicate that the suspect star is indeed fading by small amounts with a flat-bottomed shape, the professionals are often faced with long lead times for obtaining observing time on a large telescope for the spectroscopic radial velocity observations that would confirm that the secondary is an exoplanet. When SpectraShift becomes operational, probably in 2008 or 2009, the professionals will have an opportunity to collaborate with professional/amateur associations to obtain the required radial velocity observations with short lead times.

Closing Thoughts for the Chapter

There are many ways amateurs can collaborate with professionals in discovering and studying exoplanets. Once basic skills have been “mastered,” the simplest project is to choose a BTE and observe it every clear night regardless of when it is expected to undergo transit (OOT observing). This will provide a wealth of data for assessing the systematic errors that affect light curve behavior with air mass and hour angle. It may also turn up an unexpected additional transit produced by a second exoplanet in that distant planetary system. This observing strategy could also lead to the discovery of a Trojan exoplanet. I recommend OOT observing for anyone who has the required patience and interest in understanding their telescope system.

A slightly more demanding project would be measuring BTE mid-transit times and adding them to a database of similar observations by others. Eventually a new exoplanet in a resonant orbit will be found this way.
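Mid-transit times are usually compared with a linear ephemeris as “observed minus calculated” (O−C) residuals. A second planet in a resonant orbit would tug on the transiting planet and show up as a pattern in these residuals. Here is a minimal Python sketch, assuming a linear ephemeris; the HJDo, period and observed times are hypothetical.

```python
def o_minus_c(observed_mid, hjd0, period):
    """Return (epoch, O-C in minutes) for one observed mid-transit time (days)."""
    epoch = round((observed_mid - hjd0) / period)     # nearest transit number
    calculated = hjd0 + epoch * period                # predicted mid-transit
    return epoch, (observed_mid - calculated) * 24.0 * 60.0


if __name__ == "__main__":
    HJD0, PERIOD = 2454000.0, 4.0                     # hypothetical ephemeris
    observed = [2454040.0 + 2.0 / 1440.0,             # 2 minutes late
                2454080.0 - 1.5 / 1440.0]             # 1.5 minutes early
    for t in observed:
        n, oc = o_minus_c(t, HJD0, PERIOD)
        print(f"epoch {n}: O-C = {oc:+.1f} min")
```

Plotting O−C against epoch for many observers’ timings is what would reveal a periodic signature.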

Measurements of transit depth versus filter band can be useful for newly discovered exoplanets, since this information will help professionals obtain a better solution for planet size.
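To a first approximation, the fractional depth of a flat-bottomed transit equals the ratio of the planet’s and star’s disk areas, (Rp/R*)². The Python sketch below converts a depth in mmag to a flux fraction and then to a radius ratio. It deliberately ignores limb darkening and grazing geometry. Limb darkening changes with wavelength, which is why depths measured in several filters help professionals go beyond this crude estimate. For XO-1’s ~23 mmag depth it gives a flux drop of about 2.1% and Rp/R* of about 0.145.

```python
import math


def depth_fraction(depth_mmag):
    """Fractional flux drop corresponding to a transit depth in millimagnitudes."""
    return 1.0 - 10.0 ** (-0.4 * depth_mmag / 1000.0)


def radius_ratio(depth_mmag):
    """Crude Rp/R* = sqrt(depth); ignores limb darkening and grazing transits."""
    return math.sqrt(depth_fraction(depth_mmag))


if __name__ == "__main__":
    d = 23.0   # XO-1 transit depth quoted in this book (mmag)
    print(f"flux drop {100 * depth_fraction(d):.2f}%, Rp/R* ~ {radius_ratio(d):.3f}")
```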

Monitoring the NTEs at favorable times will advance the goal of identifying that dozen or so exoplanets that are producing transits that no one has detected yet.

Each observer has favored observing styles, and trying out the ones described here is a way to find your favorite. Enjoy!

─────────────────────────────────

Chapter 4

Planning the Night

─────────────────────────────────

This chapter may seem “tedious” to someone new to exoplanet observing. However, keep in mind that the requirements for observing exoplanets, with 0.002 magnitude precision, are significantly more challenging than those for observing variable stars, whose precision requirements are more relaxed by a factor of 10 or 20. Any amateur who masters exoplanet observing is working at a level somewhere between amateur and professional. Naturally, more planning will be involved for such a task.
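The factor-of-10-to-20 comparison can be made concrete. For small errors, a magnitude uncertainty σm relates to signal-to-noise ratio by σm ≈ 2.5/ln(10)/SNR ≈ 1.086/SNR. If photon (Poisson) noise were the only error source, the SNR would be the square root of the number of detected photons. The Python sketch below applies those two relations. Real measurements also suffer from sky background, read noise and scintillation, so the photon counts it prints are lower bounds. For 0.002 magnitude it gives an SNR of about 540, or roughly 300,000 detected photons per measurement. By comparison, 0.02 to 0.04 magnitude needs only a few hundred to a few thousand photons.

```python
import math

MAG_PER_FRACTION = 2.5 / math.log(10)   # ~1.0857 mag per unit fractional flux error


def required_snr(sigma_mag):
    """SNR needed for a magnitude uncertainty sigma_mag (small-error approximation)."""
    return MAG_PER_FRACTION / sigma_mag


def photons_needed(sigma_mag):
    """Detected photons needed if Poisson noise were the only error (SNR = sqrt(N))."""
    return required_snr(sigma_mag) ** 2


if __name__ == "__main__":
    for sigma in (0.002, 0.02, 0.04):   # exoplanet vs. typical variable-star goals
        print(f"{sigma:.3f} mag: SNR ~ {required_snr(sigma):.0f}, "
              f"photons ~ {photons_needed(sigma):,.0f}")
```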

Probably all amateurs go through a phase of wanting to observe many objects each night. Eventually, however, the emphasis shifts to wanting to do as good a job as possible with just one object for an entire night’s observing. Exoplanets should be thought of this way.

This chapter describes ways to prepare for a night’s observing session. The specifics of what I present are less important than the concepts of what should be thought about ahead of time. Observers who are unafraid of floundering are invited to begin with a total disregard of the suggestions in this chapter, since floundering “on one’s own” is a great learning experience. I encourage floundering; that’s how I’ve learned almost everything I know. You might actually conclude that what you learn first-hand agrees with my suggestions.

If you don’t like floundering, then for the rest of this chapter imagine that you’re visiting me in southern Arizona for an instructive observing session. Together, we’ll plan observations that illustrate the decisions that have to be made for a typical exoplanet transit. Let’s assume that it’s 2007 May 5 and you’ve asked me to show you how to observe an exoplanet transit, with the target not yet chosen.

In the afternoon we begin an “observing log.” This is an essential part of any observing session, and starting it is the first step in planning a night’s observations. We begin the log by noting the time of sunset. A table of sunset and sunrise times for any observing site is maintained by the U. S. Naval Observatory; it can be found at: http://aa.usno.navy.mil/data/docs/RS_OneYear.html. Moonrise and moonset times are also available at this site. CCD observing can begin about 55 minutes after sunset. Sky flats should be started at about sunset; the exact time for taking flats depends on the filters that are to be used, the telescope’s f-ratio, the binning choice and whether a diffuser is placed over the aperture (treated in the next chapter). Filter and binning choices can’t be made until the target is chosen. That’s what we’ll do next.

Choosing a Target

Since we’re going to spend 6 to 8 hours observing, it is reasonable to spend a few minutes evaluating the merits of various exoplanet candidates. I will assume that you are not privy to one of those secret lists of possible exoplanet candidates maintained by professional astronomers using wide field survey cameras. (If you are a member of such a team, then Appendix C was written for you.)

We want to observe a known transiting exoplanet system, which means we’ll be checking the “bright transiting exoplanet” (BTE) list. If none are transiting tonight, then we’ll have to settle for an exoplanet system where transits might be occurring. This “might” category includes exoplanets currently on the NTE list (TransitSearch.org), BTE Trojan searches and undiscovered second exoplanets in resonant orbits that produce shallow transits at unknown times. These categories are described in the previous chapter. Since you’ve asked to observe a transit, we’ll consult a spreadsheet that I maintain for my site, which includes a page for each of the 15 known BTE objects. Each page lists about a month’s worth of transit times; as I flip through them, we look for transit times in May 2007. If none of the BTEs were transiting tonight, we would consider a “might” category observation. We’re fortunate, though, since we note that XO-1 is scheduled to transit tonight. Ingress is at 8:37 PM and egress is at 11:34 PM. At mid-transit XO-1 will be at an elevation of 48 degrees. The sky is clear, the wind is calm, and one of the easiest exoplanets is transiting tonight. Life is good!

Choosing a Filter

XO-1’s brightness is V-mag = 11.2 and the transit depth is ~23 mmag. From past experience using my 14-inch telescope I know that the star’s brightness and the transit’s large depth will make this an easy observation. SNR won’t be a problem, so we aren’t restricted to the use of filters that allow lots of photons to come through, such as clear or a blue-blocking filter (BB-filter). All filter choices are possible.

As an aside, what would our options be if the exoplanet had a shallow depth, or its star were faint? A clear filter would deliver the most light and produce the highest SNR. However, a BB-filter might be better since it excludes blue light (~7%), which means it would reduce the size of one of the most troublesome light curve systematic errors: baseline “curvature” that’s symmetric about transit, caused by reference stars with a different color than the exoplanet star (more details in Chapter 14, “Star Colors”). For small depths this curvature can be troublesome. Observers with 10-inch (or smaller) telescopes should consider using the BB-filter often. Observers with 20-inch (or larger) apertures should rarely have to use the BB-filter. It’s the 12- and 16-inch telescope observers who may have difficult choices for typical exoplanet candidates with depths in the 15 to 25 mmag range.

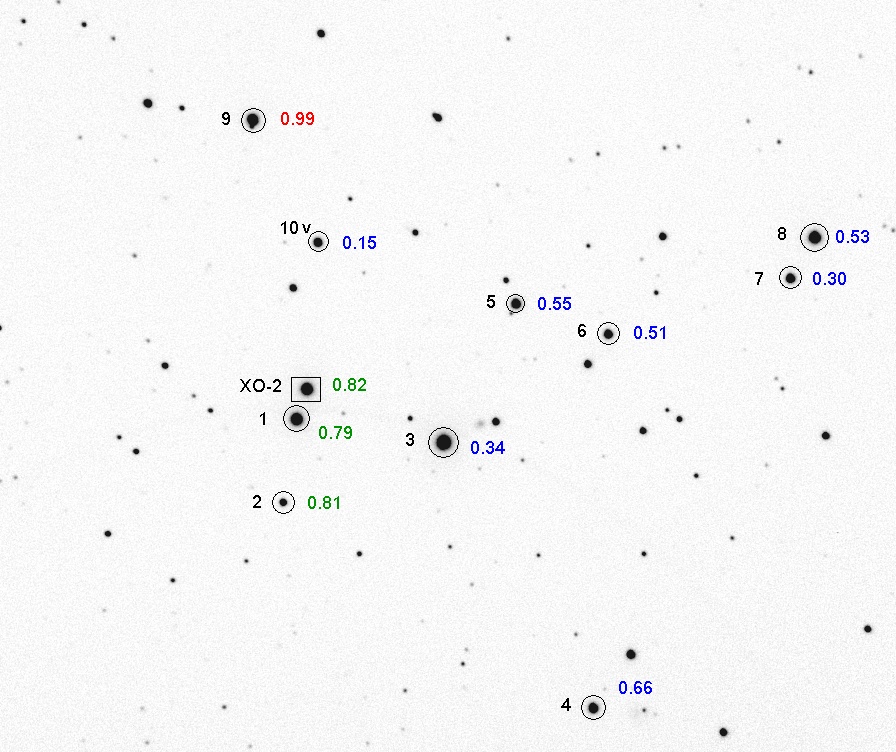

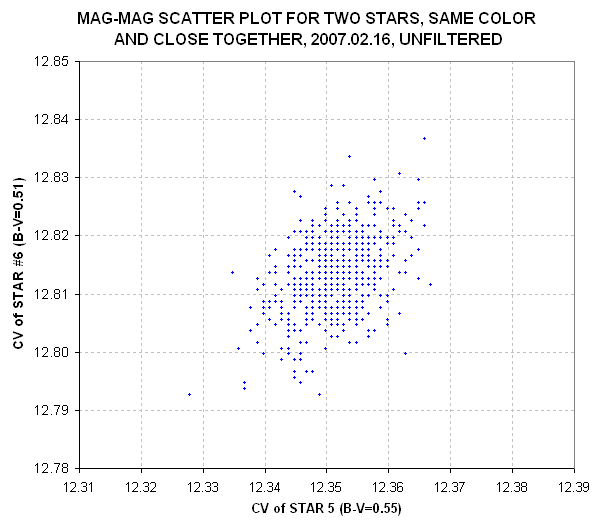

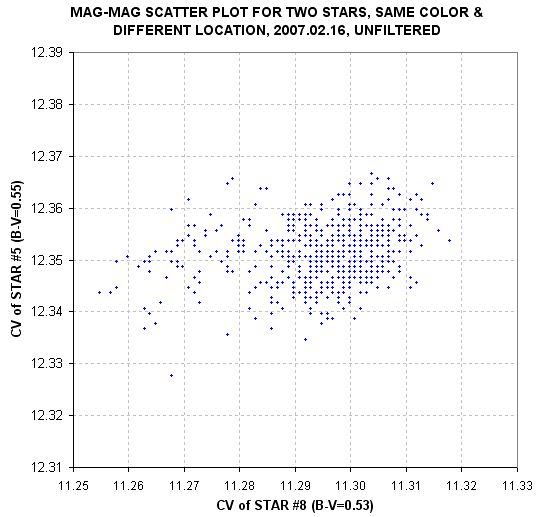

Since the XO-1 transit is an easy one, we are free to review other filter choice considerations, such as “science needs.” If there are no B-band observations for a known exoplanet, then a B-band observation could be valuable. There are occasions when C-filter (clear filter) observing is acceptable. XO-2 is a good example, since it has a binary companion 31 arcseconds away that has the same color and brightness as XO-2. Because the two stars have the same color there is almost no penalty for observing unfiltered; I’m referring to the “star color extinction effect” that causes baselines to be curved symmetrically about transit. This is explained in Chapter 14, so for now just accept my assertion that the presence of reference stars having the same color as the target star (exoplanet star) is a consideration in choosing a filter. When high air mass observing is required, I-band is a good choice (all other things being equal).

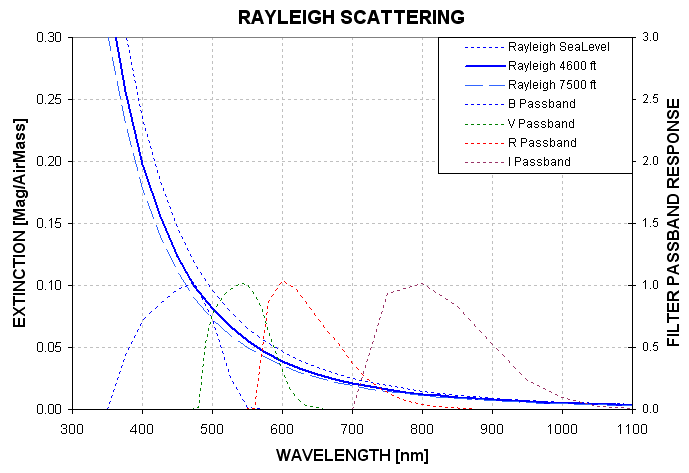

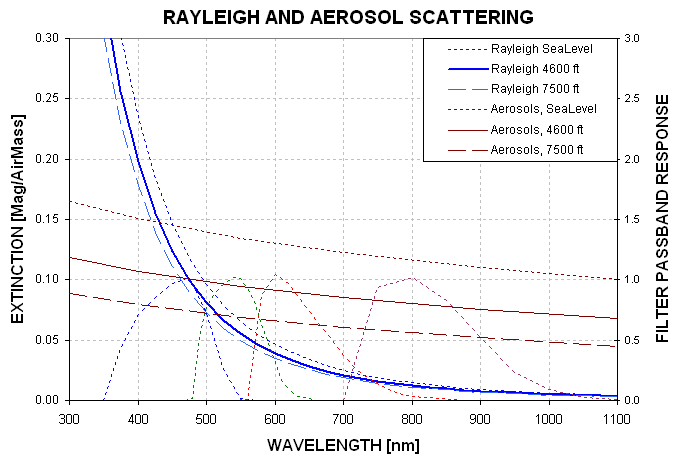

The presence of moonlight should influence filter choice. Even though you can’t see it, when there’s moonlight the night sky is blue. A moonlit night sky will be just as blue as a sunlit day sky, and for the same reason (Rayleigh scattering). If the moon will be up during a transit, avoid using a B-band filter or a clear filter. I-band observations are affected the least by moonlight. R-band is almost as good, and it passes more light, so if SNR is going to be important consider using an R-band filter on moonlit nights. If SNR is likely to be very important, then consider using a BB-filter, which at least filters out the bright sky B-band photons. The moonless night sky is not blue, but extinction is still greatest at B-band and smallest at I-band, so for dark skies air mass is more important than sky color when choosing a filter. On May 5 at my site the moon rises at 10:35 PM, during the second half of the transit. I recommend using an I-band filter for the XO-1 observations. This is tentative, however, since other considerations are important.
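The ranking of filters under moonlight follows from the λ^-4 dependence of Rayleigh scattering. The Python sketch below compares relative scattering strength at approximate effective wavelengths for the B, V, R and I bands. The wavelengths are assumed round values. The sketch ignores the Moon’s spectrum, filter bandwidths, detector response and aerosol scattering, so it shows only the trend. Rayleigh scattering comes out roughly ten times stronger at B-band than at I-band.

```python
# Approximate effective wavelengths (nm); assumed round values for illustration.
BANDS_NM = {"B": 440.0, "V": 550.0, "R": 640.0, "I": 800.0}


def rayleigh_relative(band, reference="I"):
    """Rayleigh scattering strength in `band` relative to `reference` (scales as lambda**-4)."""
    return (BANDS_NM[reference] / BANDS_NM[band]) ** 4


if __name__ == "__main__":
    for band in BANDS_NM:
        print(band, round(rayleigh_relative(band), 1))
```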

Next, we run TheSky/Six (a “planetarium program” from Software Bisque) to find out the elevation of XO-1 during the night, and specifically during the predicted transit. Acceptable elevations depend on the filter; B-band observing will require high elevations (e.g., EL > 30 degrees), whereas I-band observing can be done at much lower elevations (e.g., EL > 15 degrees). We need to allow for acceptable elevations for the entire transit, from ~1.5 hours before first contact to ~1.5 hours after last contact. Transits of “hot Jupiters” (large exoplanets orbiting close to their star) have durations similar to XO-1’s, ~3 hours. The best observing situation is for mid-transit to occur at midnight, but this rarely happens. We need to study XO-1’s elevation versus time for the 7 hours centered on mid-transit in order to be sure of our filter choice.
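TheSky does this calculation for us, but the underlying geometry is simple: sin(EL) = sin(φ) sin(δ) + cos(φ) cos(δ) cos(H), for site latitude φ, star declination δ and hour angle H. The Python sketch below evaluates that formula. It then converts elevation to air mass with the Kasten and Young (1989) approximation, which stays well behaved near the horizon, where the simple secant of the zenith angle overestimates. The latitude and declination in the example are illustrative values, not a substitute for a planetarium program. The output shows why the elevation limits matter: air mass is about 2.0 at 30 degrees, about 2.9 at 20 degrees and about 3.8 at 15 degrees.

```python
import math


def elevation_deg(lat_deg, dec_deg, hour_angle_h):
    """Elevation (deg) of a star from site latitude, declination and hour angle (hours)."""
    lat = math.radians(lat_deg)
    dec = math.radians(dec_deg)
    ha = math.radians(15.0 * hour_angle_h)          # 1 hour of HA = 15 degrees
    s = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(ha)
    return math.degrees(math.asin(s))


def air_mass(el_deg):
    """Kasten & Young (1989) air mass; valid only for stars above the horizon."""
    return 1.0 / (math.sin(math.radians(el_deg))
                  + 0.50572 * (el_deg + 6.07995) ** -1.6364)


if __name__ == "__main__":
    lat, dec = 31.5, 28.0                            # illustrative site and star
    for ha in range(-6, 1):                          # rising star, 6 h east up to the meridian
        el = elevation_deg(lat, dec, ha)
        print(f"HA {ha:+d} h: EL {el:5.1f} deg, air mass {air_mass(el):.2f}")
```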

Sunset occurs at 7:03 PM, so quality observing could start at ~7:58 PM (lower quality observations could start at ~7:45 PM). When quality observing can begin, XO-1 will be at 20 degrees elevation and rising. If observing began at 7:58 PM, ~40 minutes of data could be obtained before ingress. That’s pretty short, since we want 1 to 1.5 hours, but it’s just enough for establishing an “out-of-transit” baseline level. After egress there will be lots of data, since XO-1 will still be rising and it will be dark. Observations should extend to at least an hour after egress, so let’s plan on observing for 2 hours after egress to be safe. Observations of XO-1 will therefore end at ~1:30 AM.
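The timeline arithmetic in this paragraph can be written out explicitly. The Python sketch below uses only the standard library. The 55-minute delay after sunset and the 2-hour post-egress margin are the planning rules given in the text, and the times are the local clock times quoted for 2007 May 5; the function name is illustrative. It reproduces the ~40-minute (39-minute) pre-ingress baseline, a mid-transit time of about 10:05 PM and an end time near 1:30 AM (1:34 AM exactly).

```python
from datetime import datetime, timedelta


def plan_night(sunset, ingress, egress, dark_delay_min=55, post_egress_h=2.0):
    """Derive the key times for a transit observing session (local clock times)."""
    start = sunset + timedelta(minutes=dark_delay_min)     # quality observing begins
    return {
        "start": start,
        "pre_ingress_baseline": ingress - start,           # out-of-transit data before ingress
        "mid_transit": ingress + (egress - ingress) / 2,
        "transit_length": egress - ingress,
        "end": egress + timedelta(hours=post_egress_h),
    }


if __name__ == "__main__":
    day = datetime(2007, 5, 5)
    plan = plan_night(sunset=day.replace(hour=19, minute=3),
                      ingress=day.replace(hour=20, minute=37),
                      egress=day.replace(hour=23, minute=34))
    for name, value in plan.items():
        print(f"{name:>22}: {value}")
```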

It is worth noting

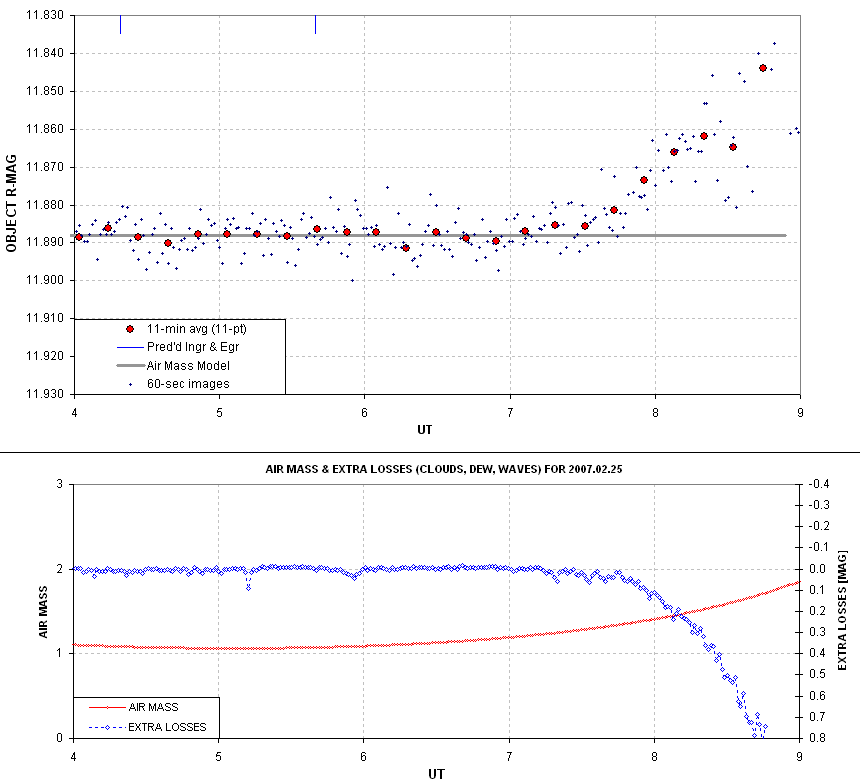

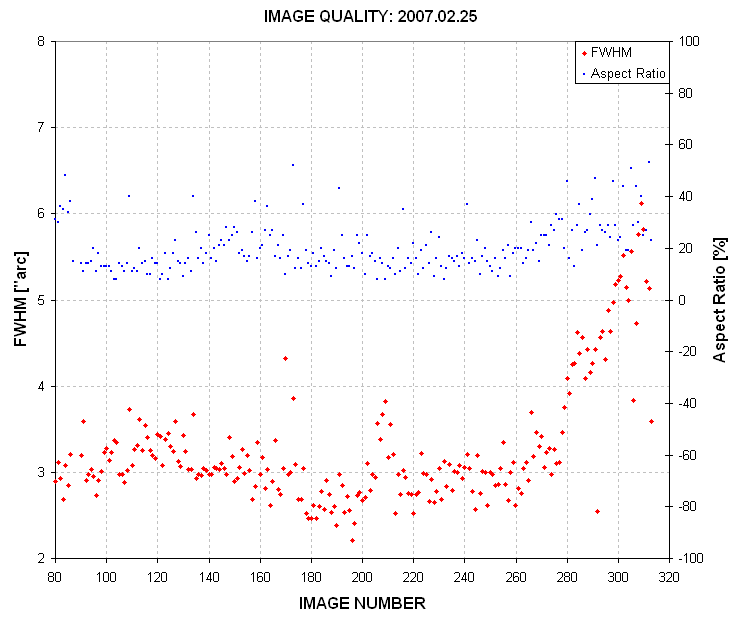

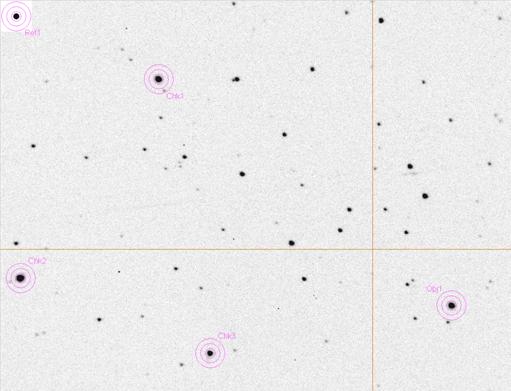

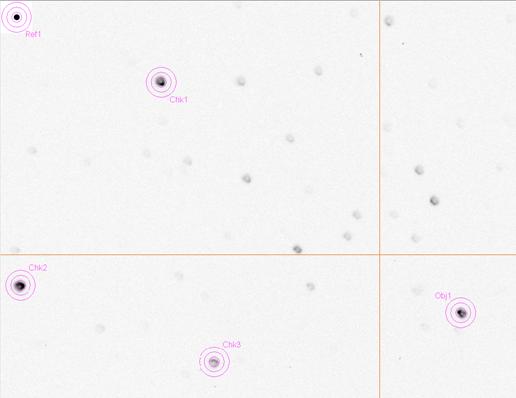

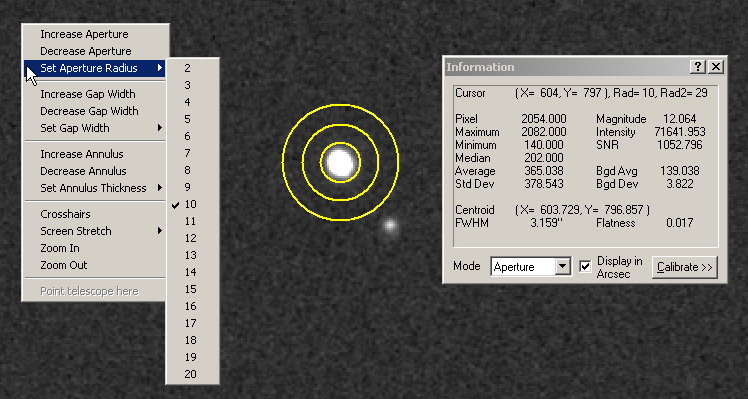

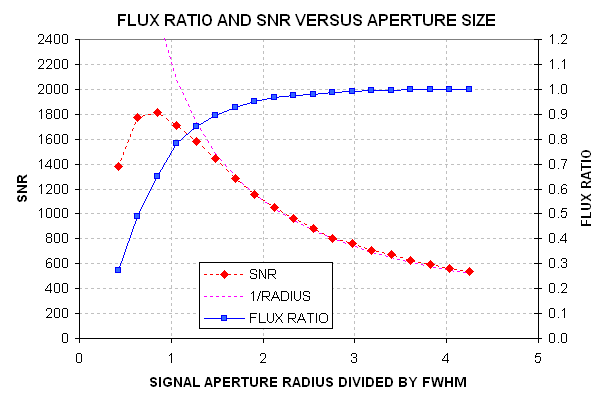

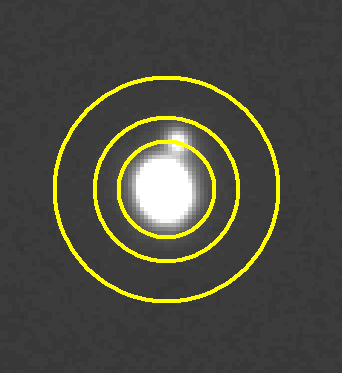

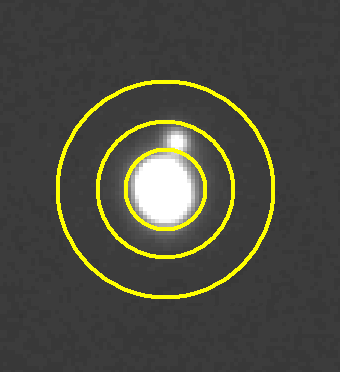

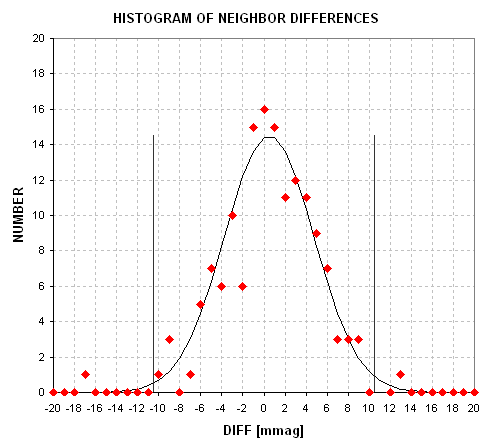

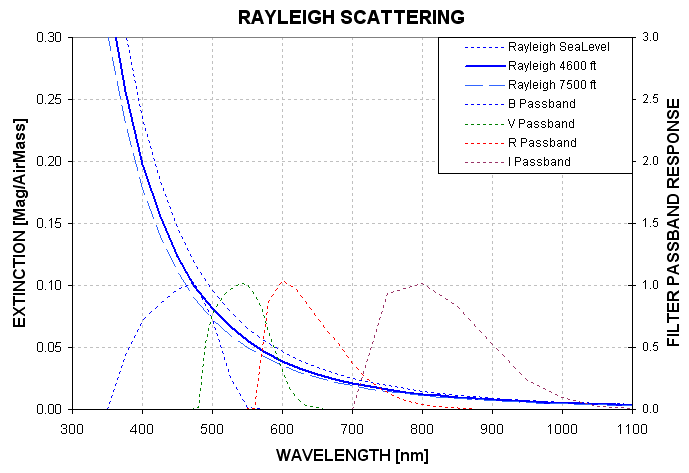

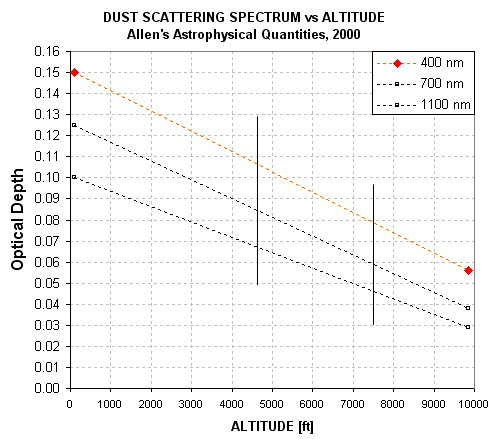

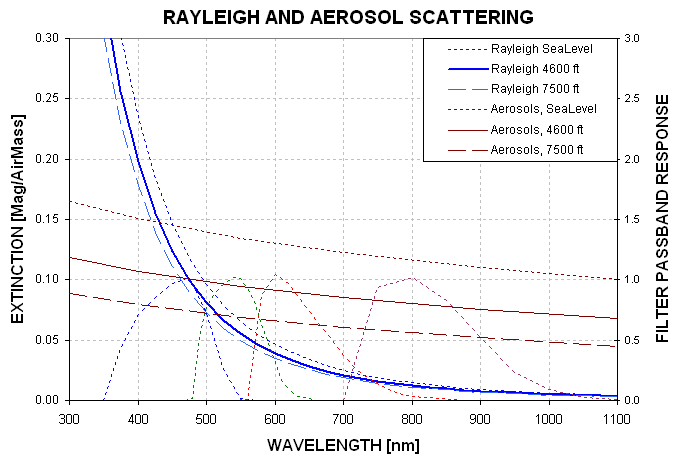

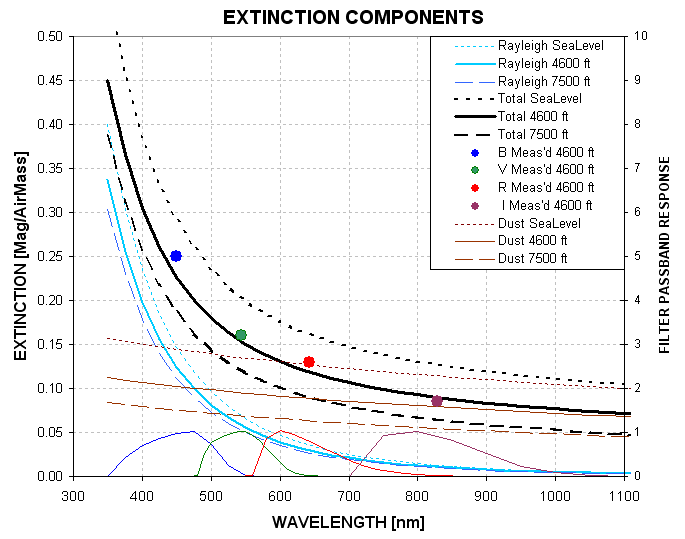

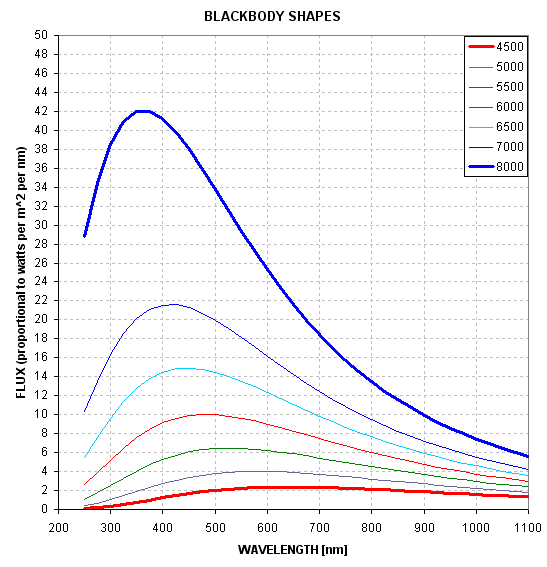

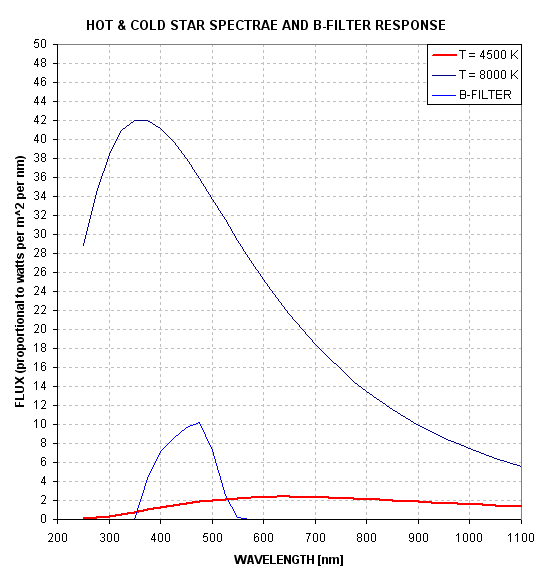

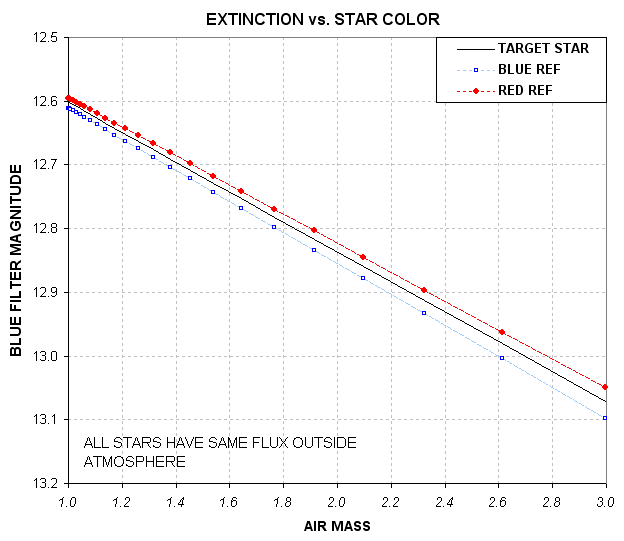

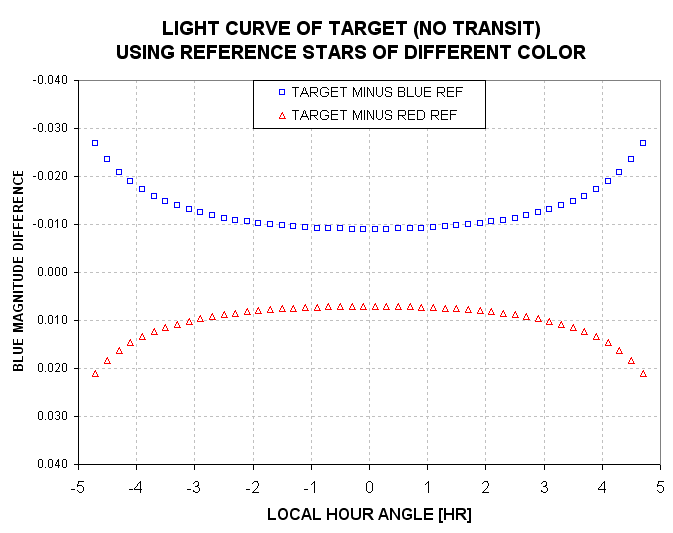

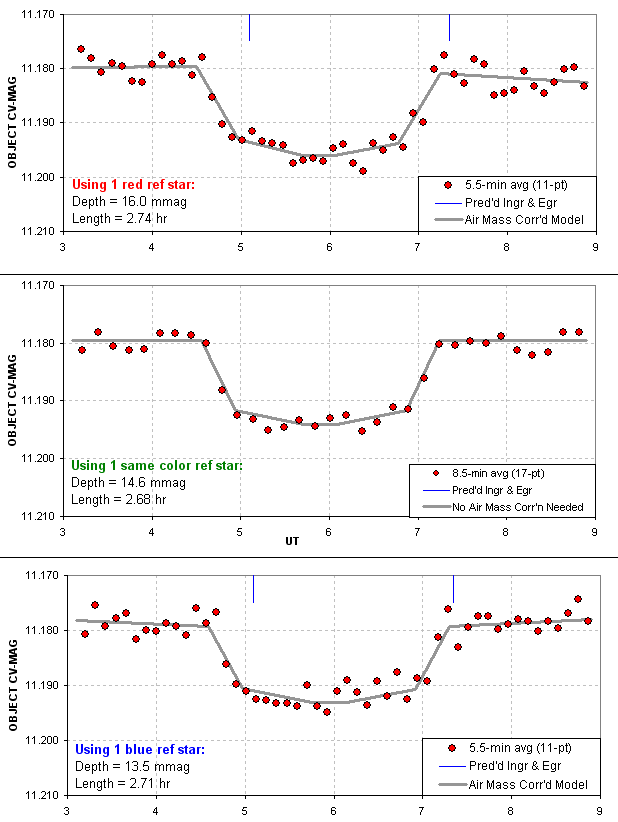

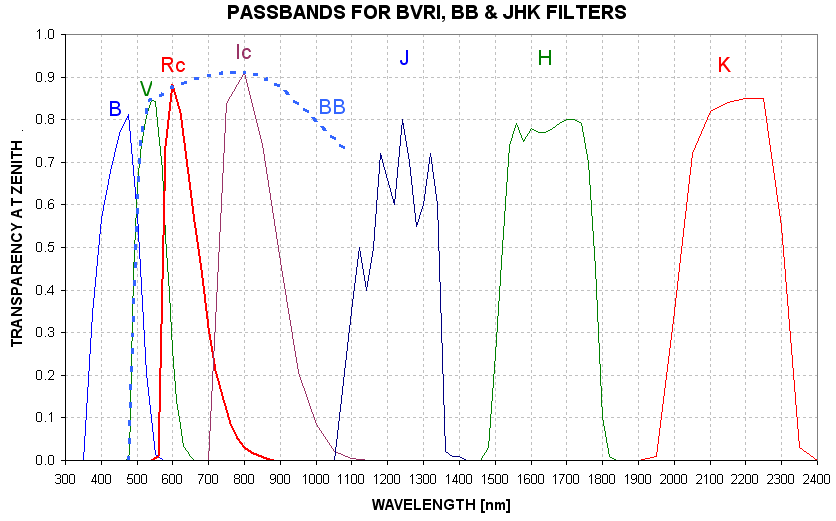

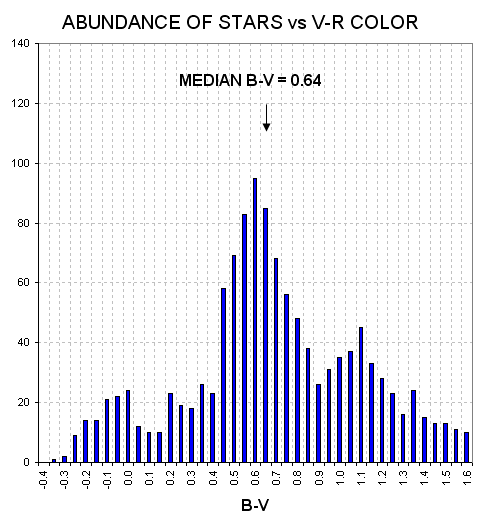

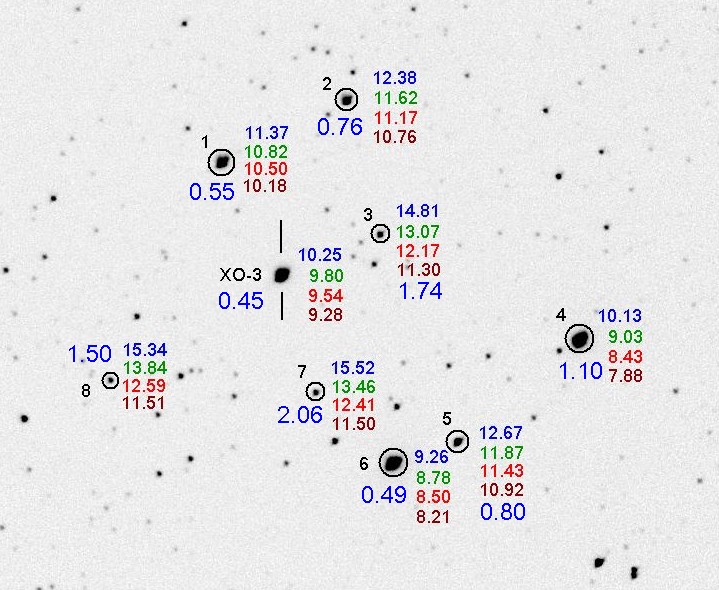

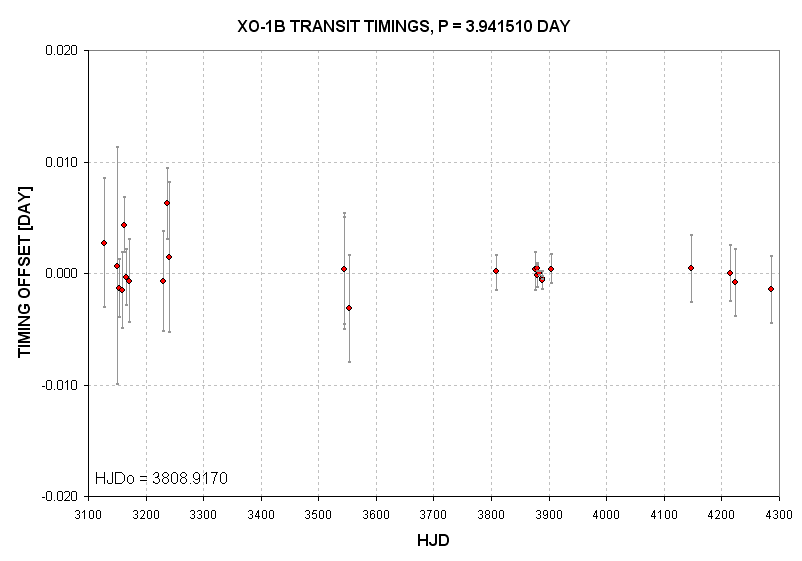

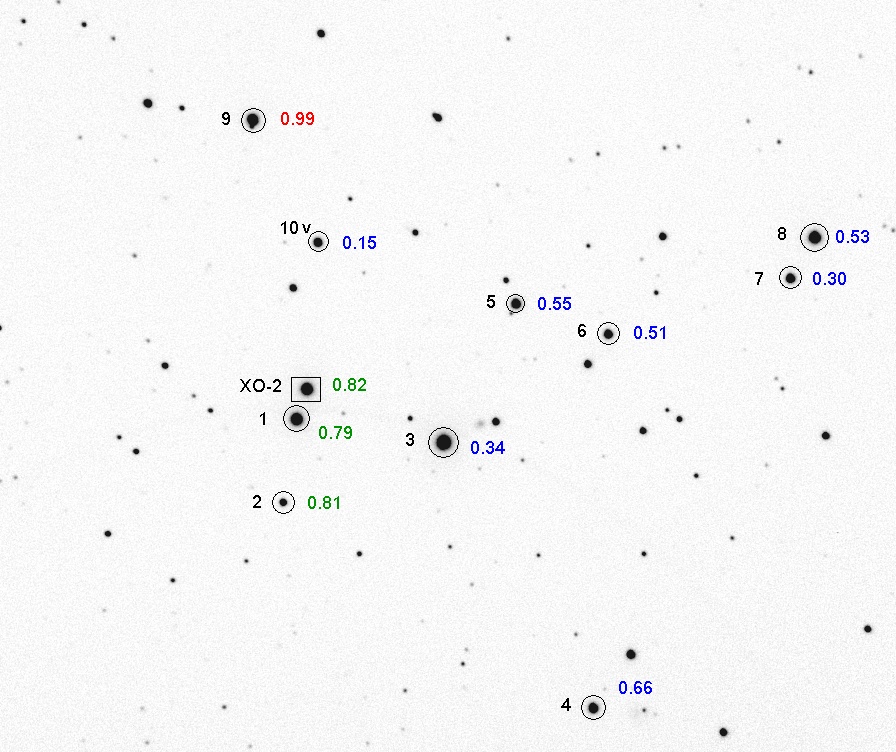

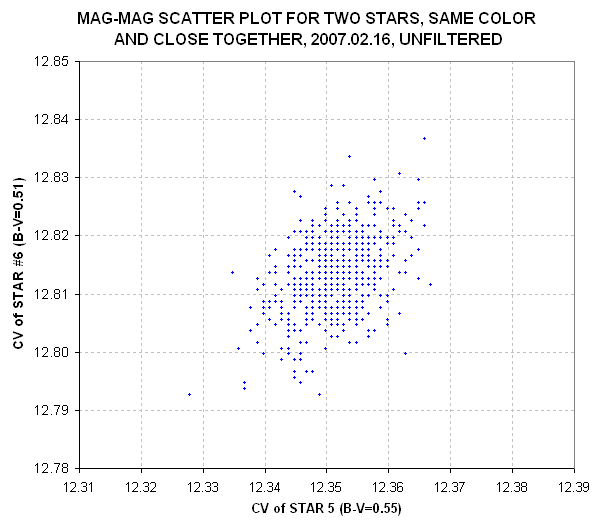

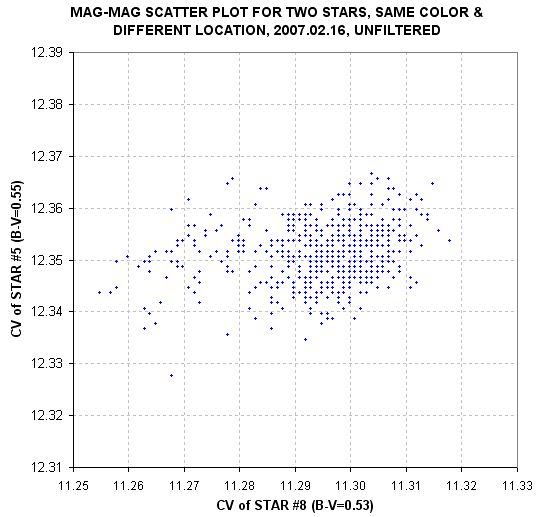

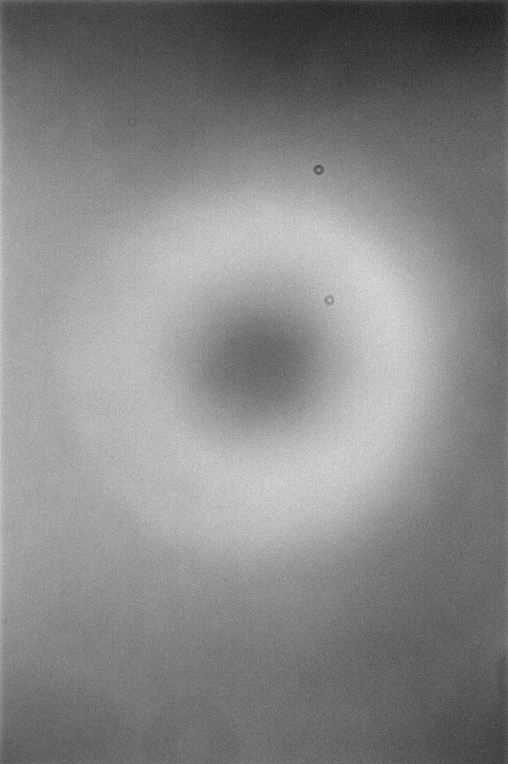

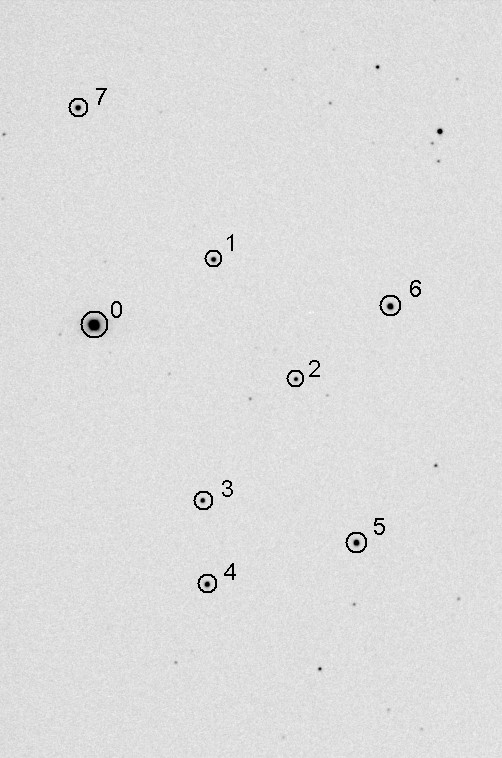

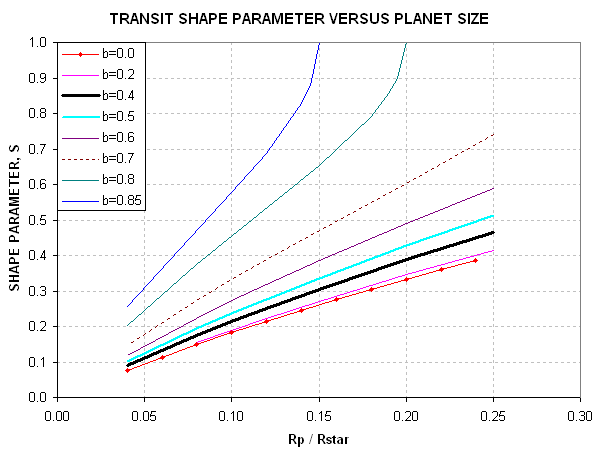

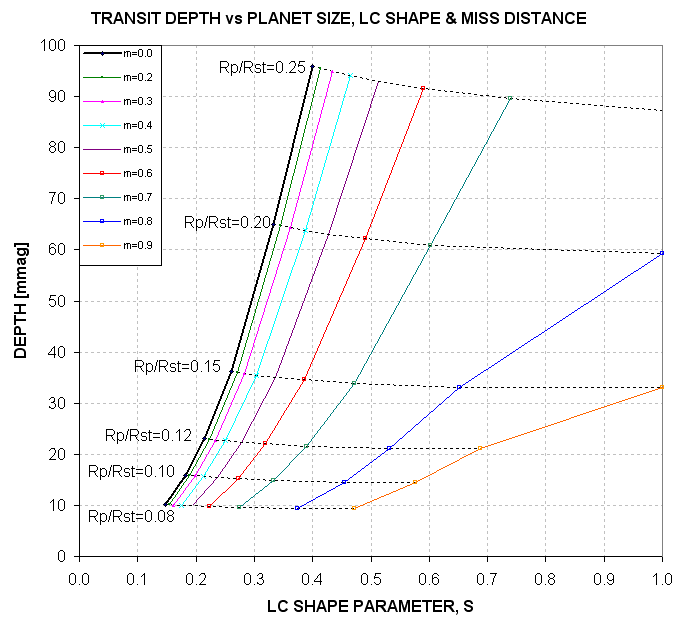

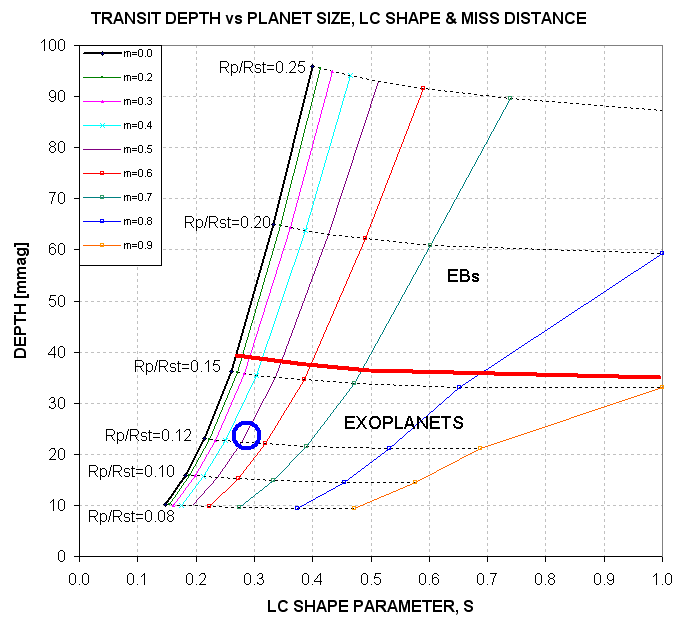

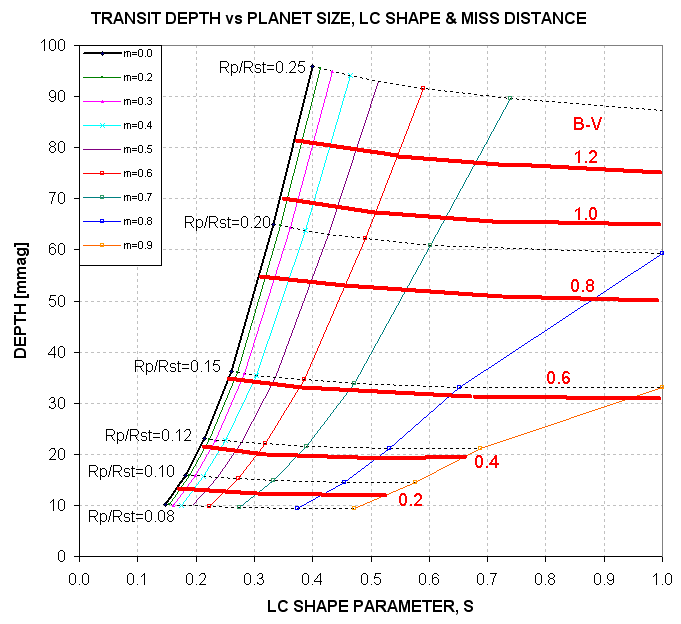

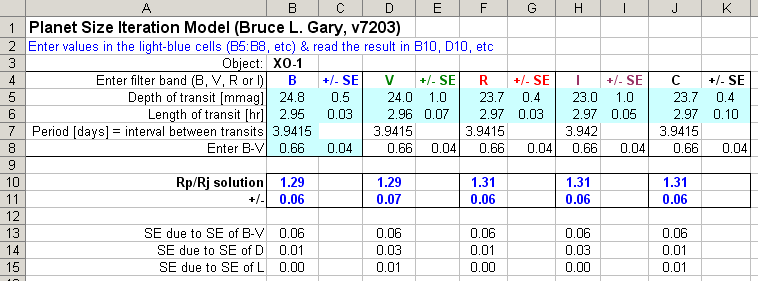

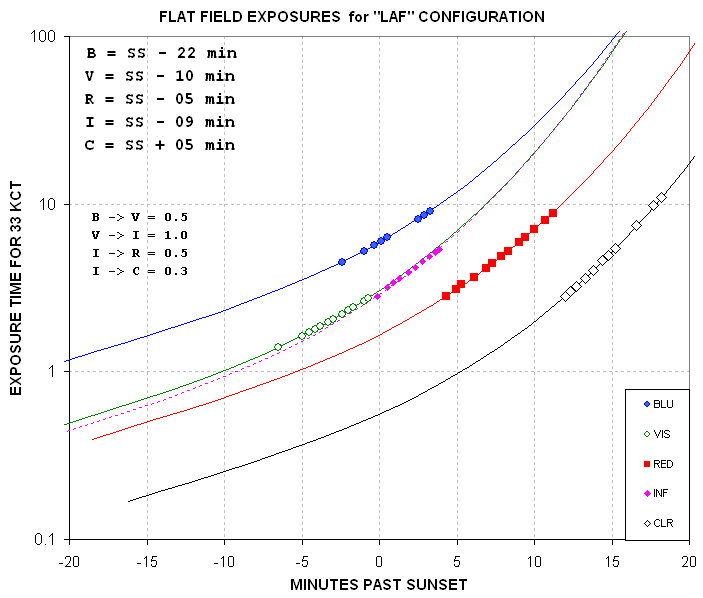

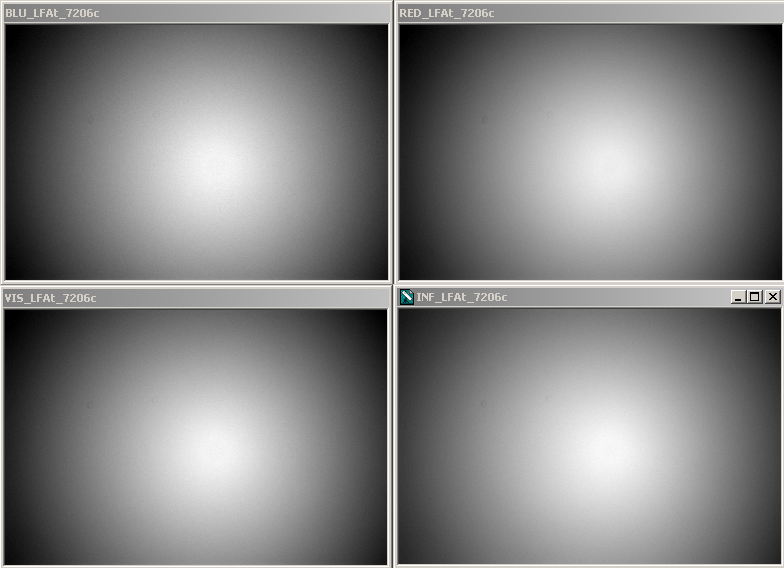

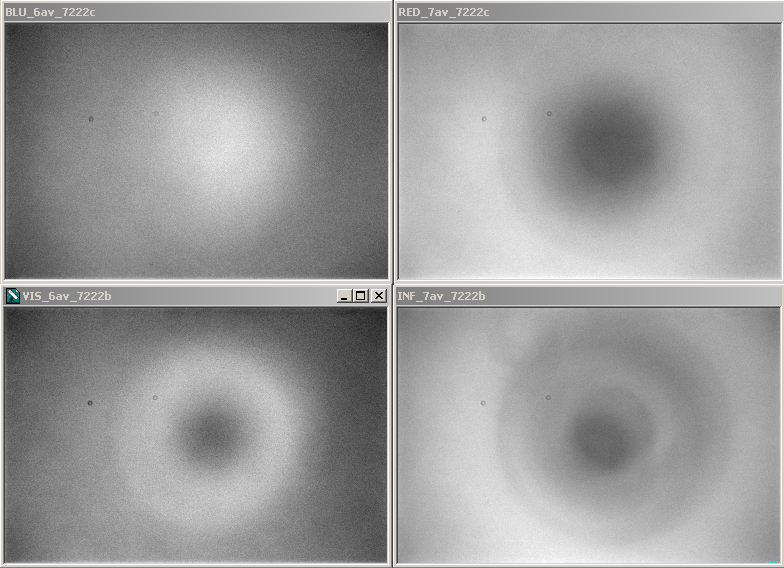

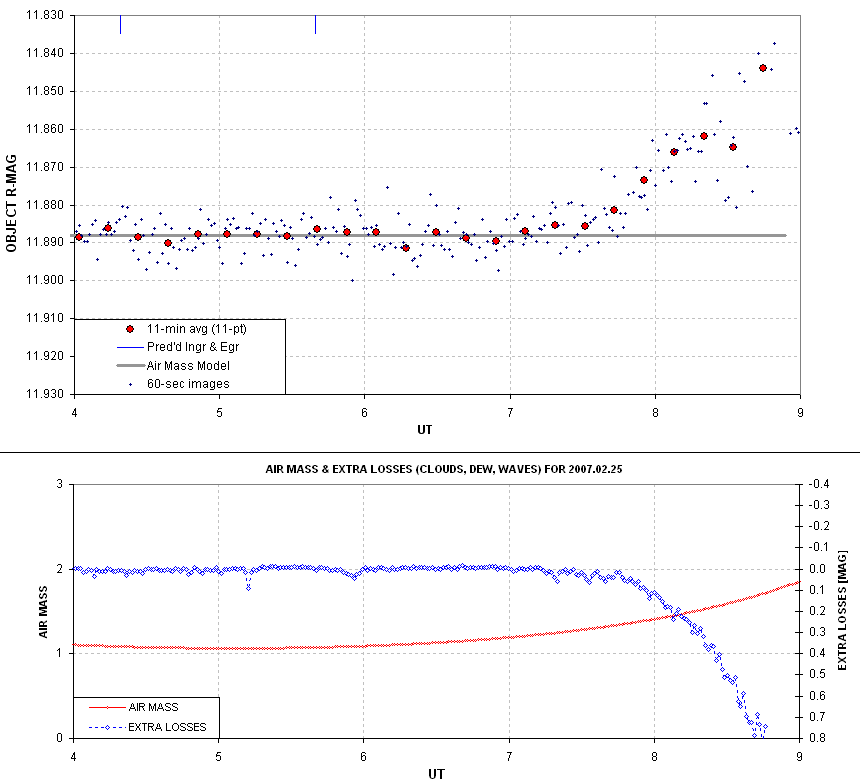

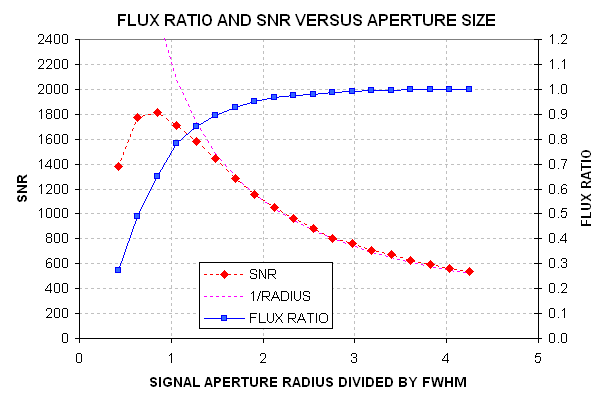

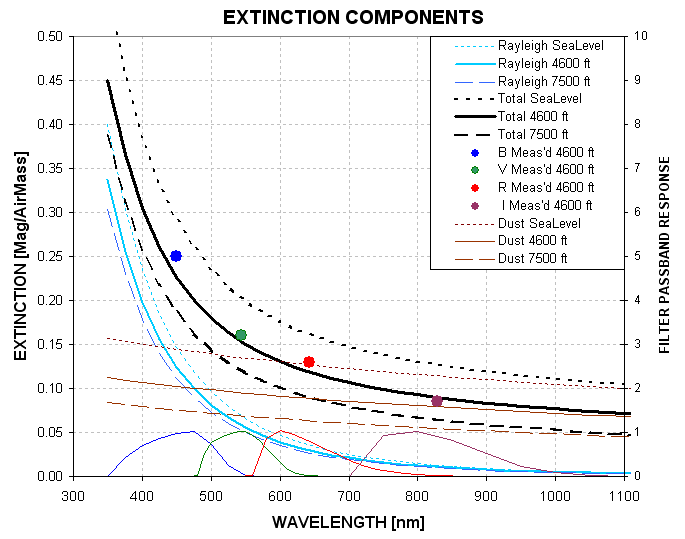

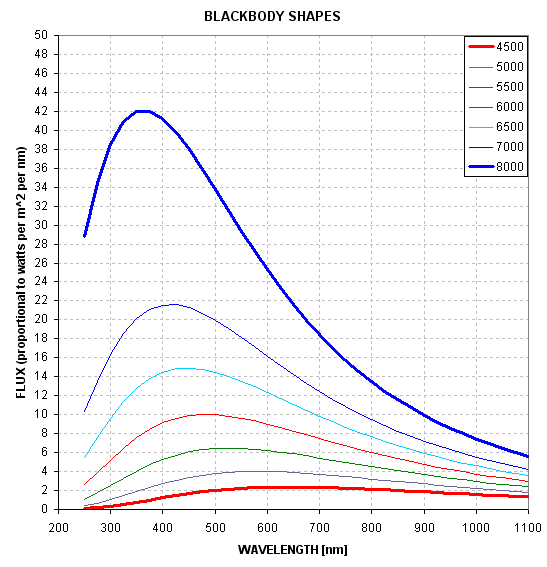

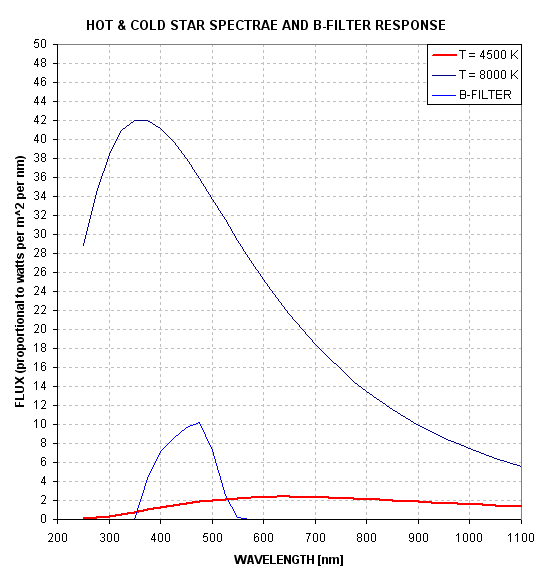

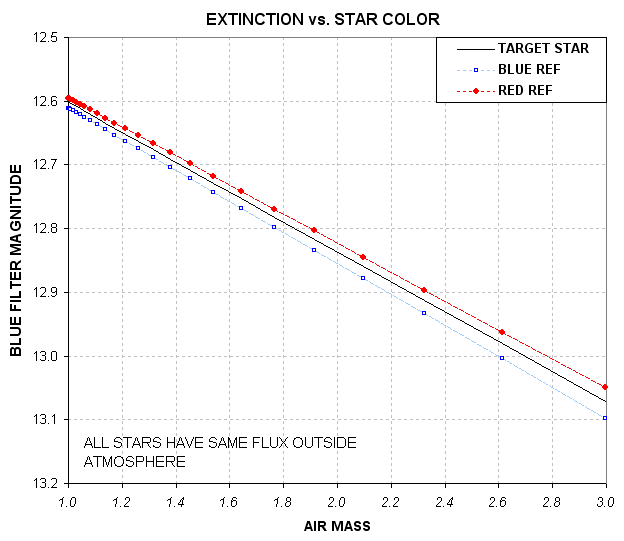

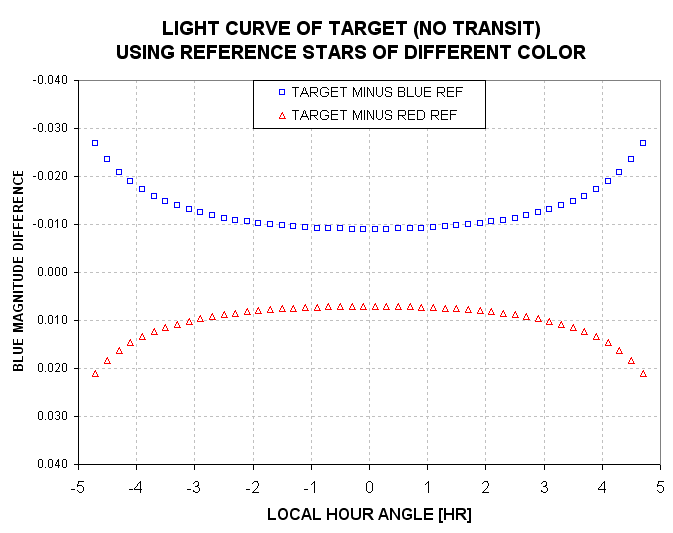

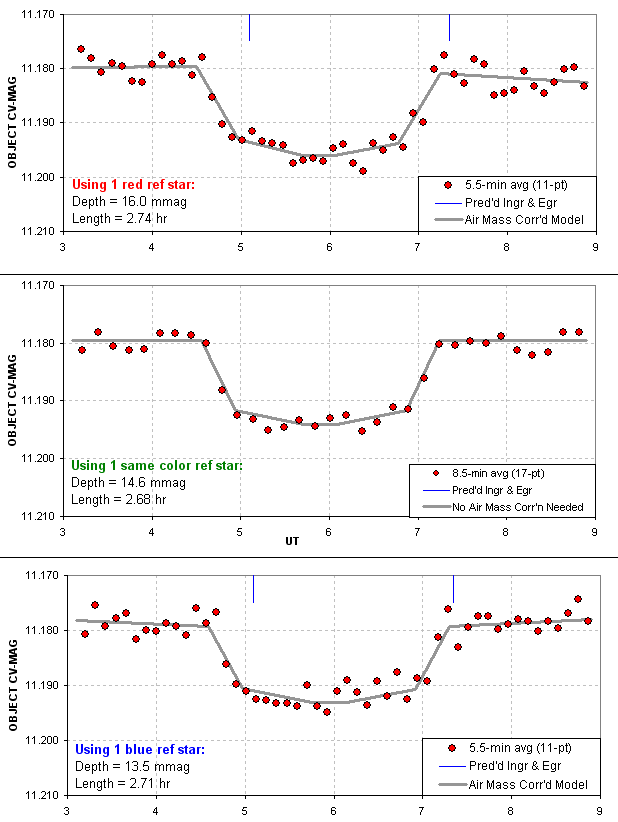

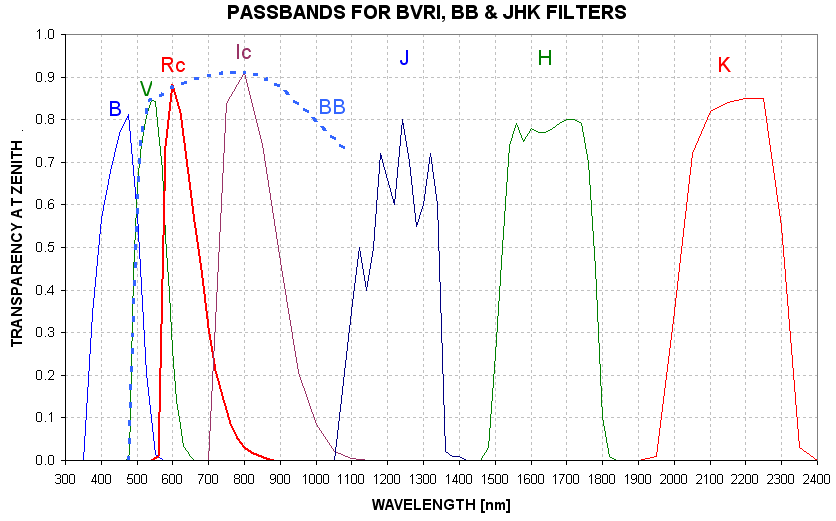

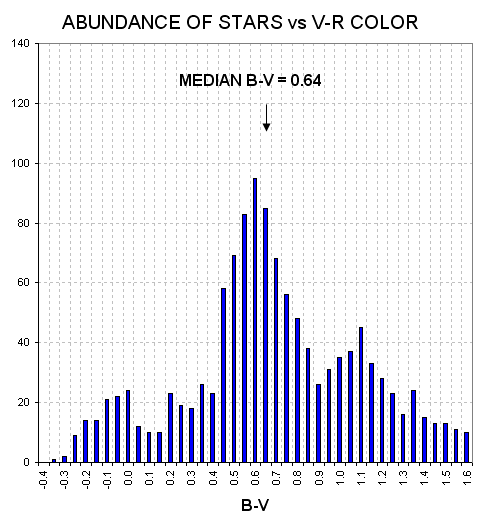

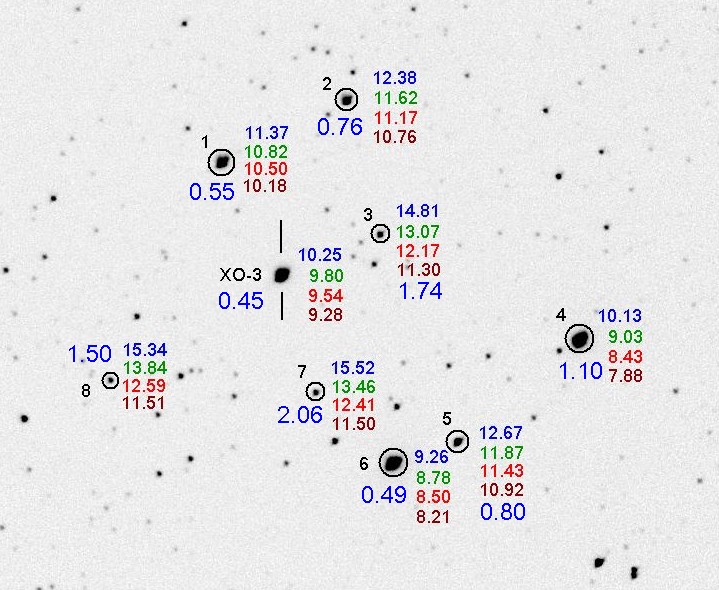

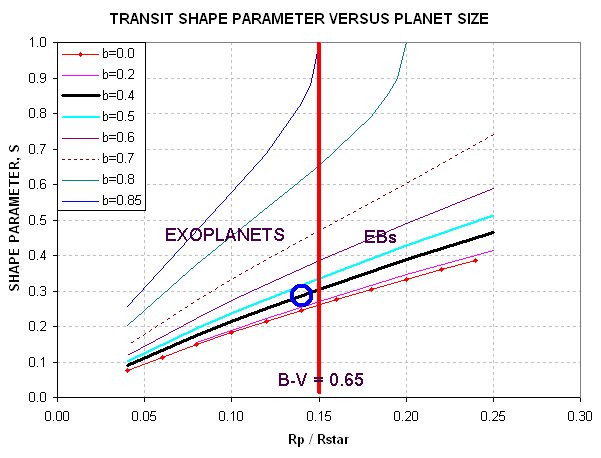

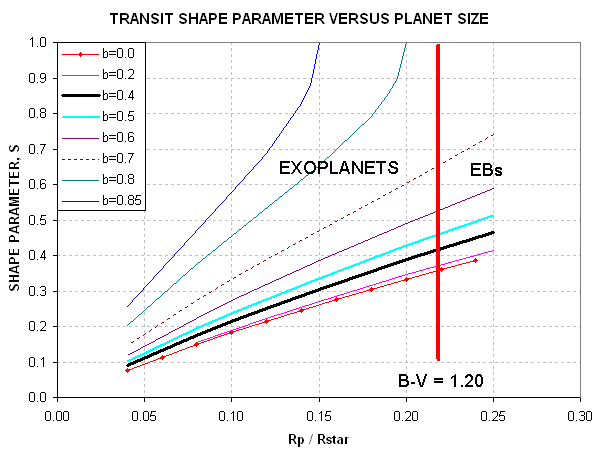

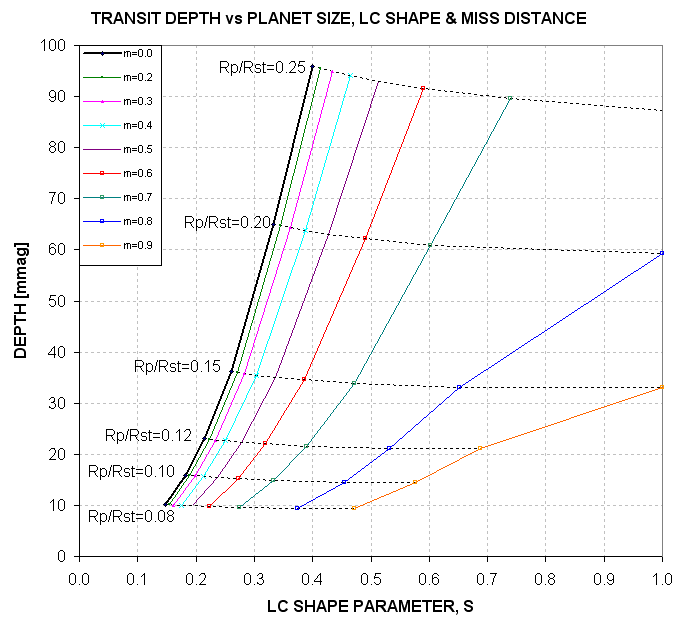

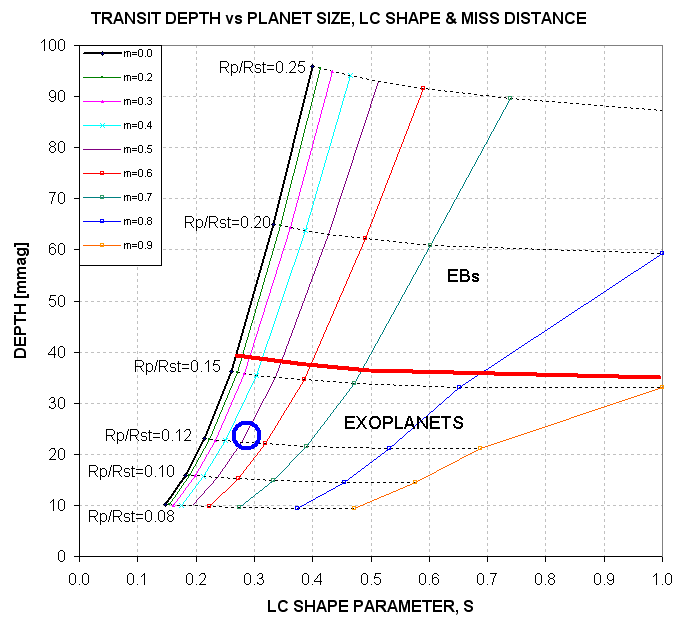

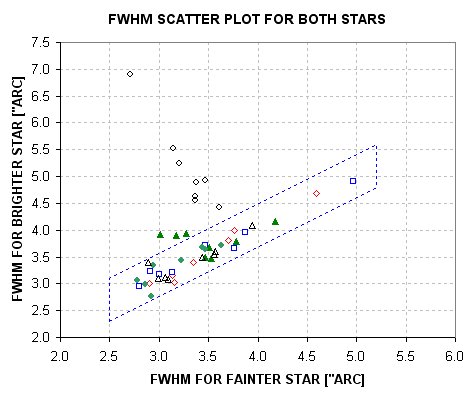

that because observations will start at a low 20 degrees elevation (air